In this article we will look in some detail at the new features for offloading storage workloads to the storage array. The feature set is called VAAI (vStorage APIs for Array Integration), and it enables the ESXi host to hand over to the array some of the work that the array is best at doing.

VAAI was introduced in vSphere 4.1 with three offload features, called primitives, and vSphere 5.0 added a further option for VMFS. VMFS datastores are accessed with block-based protocols, e.g. Fibre Channel or iSCSI, and as we shall see, offloading can lead to large performance improvements, especially for iSCSI. In this first article we will study one of the most useful VAAI primitives.

Clone Blocks / Full Copy / XCOPY (different names for the same feature/primitive)

Let us first look at some common operations on a storage array that does not support VAAI. When the VMware administrator deploys a new virtual machine from a template, for example, essentially the whole original virtual disk file has to be copied. The same applies when cloning a virtual machine or using Storage vMotion.

Since the host communicates with the array only through SCSI commands, it first has to read the data that is to be copied and then write it to the new location. The host sends a read request to the array, often for a 32 kB block. The array retrieves the data from its disk system and sends it over the storage network to the ESXi host, which then sends a write request to the array and delivers the same data back over the network, after which the array writes it to disk. This has to be repeated for every piece of the virtual disk files.

For a small disk holding just 4 GB of data, this means around 260 000 commands from the host (one read and one write per 32 kB block), consuming CPU time, and 8 GB of data traffic on the storage network (4 GB read to the host and 4 GB written back), occupying bandwidth.
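The figures above follow from simple arithmetic. The sketch below counts commands and network bytes for a host-based copy, assuming the 32 kB transfer size from the text (used here as an illustrative value, not a documented ESXi constant) and binary units (1 GB = 1024³ bytes), which is what makes the numbers come out at about 260 000. The function name `host_based_copy_cost` is hypothetical.

```python
# Sketch: cost of a host-based (non-VAAI) copy, where every chunk is read
# from the array to the host and then written back to the array.
# Assumption: a 32 KiB transfer size, taken from the example in the text.

KIB = 1024
GIB = 1024 ** 3


def host_based_copy_cost(disk_bytes: int, io_size: int = 32 * KIB) -> tuple[int, int]:
    """Return (scsi_commands, network_bytes) for copying disk_bytes via the host."""
    chunks = -(-disk_bytes // io_size)  # ceiling division: a partial last chunk still needs I/O
    commands = 2 * chunks                # one READ and one WRITE per chunk
    network_bytes = 2 * disk_bytes       # data crosses the network twice: array->host, host->array
    return commands, network_bytes


commands, traffic = host_based_copy_cost(4 * GIB)
print(f"{commands:,} commands, {traffic / GIB:.0f} GiB of network traffic")
```

Running it prints `262,144 commands, 8 GiB of network traffic`, which the article rounds to 260 000 commands and 8 GB.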

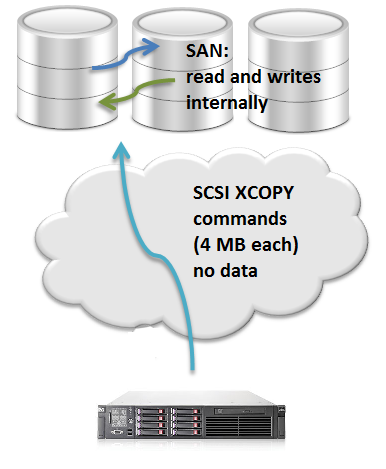

With a VAAI-capable storage array the situation is very different. If the new virtual machine is to be placed on the same storage array (not necessarily the same datastore), the host can use the Clone Blocks primitive.

The host can now simply “tell” the array which blocks it wants duplicated, without reading each byte over the network and writing it back. The clone instructions are sent in 4 MB chunks, so for the same 4 GB file (without VAAI: about 260 000 commands and 8 GB of storage network traffic) only about 1024 commands are needed, with close to zero traffic on the storage network. The copying itself still takes place inside the array.
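To make the comparison concrete, the sketch below computes both command counts under the chunk sizes quoted in the text: 32 kB per host read or write without VAAI, and 4 MB per clone instruction with Clone Blocks. Both sizes are taken from the article as assumptions; real arrays and ESXi versions may use other values. The helper names are hypothetical.

```python
# Sketch: SCSI command counts for copying a disk with and without the
# Clone Blocks (XCOPY) primitive. Assumed chunk sizes, from the text:
# 32 KiB per host READ/WRITE, 4 MiB per clone instruction.

KIB = 1024
MIB = 1024 ** 2
GIB = 1024 ** 3


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b for positive integers."""
    return -(-a // b)


def copy_commands(disk_bytes: int, vaai: bool) -> int:
    if vaai:
        # One clone instruction per 4 MiB; the data itself stays inside the array.
        return ceil_div(disk_bytes, 4 * MIB)
    # Without VAAI: one READ plus one WRITE per 32 KiB chunk.
    return 2 * ceil_div(disk_bytes, 32 * KIB)


disk = 4 * GIB
without = copy_commands(disk, vaai=False)
with_vaai = copy_commands(disk, vaai=True)
print(without, with_vaai, without // with_vaai)
```

This prints `262144 1024 256`: under these assumptions the host issues 256 times fewer commands, and the bulk data no longer crosses the storage network at all.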

The same applies to Storage vMotion when both datastores reside on the same storage array. Storage vMotion operations then consume much less CPU on the hosts and far less bandwidth on the storage network.

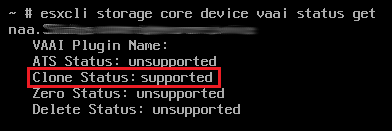

So, how do you know whether your storage array supports the VAAI primitive “Clone Blocks”? You could of course check with the array vendor (support might require a firmware update on the array), but it is nice to verify it from the ESXi side. Unfortunately there is no way to know for sure from the GUI, so we have to use the command line.

On ESXi 5.0 you can use the local ESXi Shell, an SSH session, or the vCLI from Windows or Linux, and run the command:

```shell
esxcli storage core device vaai status get
```

In the output, find the NAA ID of the LUN you are interested in. The “Clone Status” line shows whether the primitive is supported.
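If you save the command output to a file, picking out the Clone Status per device is easy to script. The sketch below assumes the typical layout of this command's output (an unindented device ID followed by indented `Field: value` lines); the `SAMPLE` text and its NAA IDs are made up for illustration, and the exact fields may differ between ESXi releases. The function names are hypothetical.

```python
# Sketch: read the "Clone Status" per device from saved output of
#   esxcli storage core device vaai status get
# SAMPLE is illustrative only: the device IDs are invented and the exact
# field layout may vary between ESXi releases.

SAMPLE = """\
naa.60000000000000000000000000000001
   VAAI Plugin Name:
   ATS Status: supported
   Clone Status: supported
   Zero Status: supported
   Delete Status: unsupported

naa.60000000000000000000000000000002
   VAAI Plugin Name:
   ATS Status: unsupported
   Clone Status: unsupported
   Zero Status: unsupported
   Delete Status: unsupported
"""


def parse_vaai_status(text: str) -> dict[str, dict[str, str]]:
    """Map each device ID to its 'Field: value' pairs."""
    devices: dict[str, dict[str, str]] = {}
    current = None
    for line in text.splitlines():
        if not line.strip():
            continue  # blank line between devices
        if not line[0].isspace():
            # Unindented line: start of a new device (e.g. an naa. ID).
            current = line.strip()
            devices[current] = {}
        elif current is not None and ":" in line:
            key, _, value = line.partition(":")
            devices[current][key.strip()] = value.strip()
    return devices


def clone_supported(text: str, device_id: str) -> bool:
    """True only if the device reports exactly 'Clone Status: supported'."""
    status = parse_vaai_status(text).get(device_id, {}).get("Clone Status")
    return status == "supported"


for dev, fields in parse_vaai_status(SAMPLE).items():
    print(dev, fields.get("Clone Status"))
```

The exact comparison in `clone_supported` is deliberate: “unsupported” contains “supported”, so a substring test would misreport a LUN without Clone Blocks support.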

The bandwidth savings of VAAI Clone Blocks are likely to have the greatest impact on iSCSI-based storage, which today typically runs over 1 Gbit/s networks. Such links work well for so-called random access, but are easily saturated by sequential access such as cloning, deploying from a template, or Storage vMotion. Figures from VMware indicate improvements of up to 7 times for these operations on iSCSI.
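A back-of-the-envelope estimate shows why a 1 Gbit/s link is so easily consumed. The sketch below computes a lower bound on how long the 4 GB host-based copy from the earlier example keeps such a link busy. Its assumptions are ours, not VMware's: a full-duplex link, no protocol overhead, and the read stream (array to host) and write stream (host to array) running concurrently in opposite directions.

```python
# Sketch: lower bound on the time a host-based (non-VAAI) copy saturates a
# 1 Gbit/s iSCSI link. Assumptions: full-duplex link, no protocol overhead,
# reads and writes flowing concurrently in opposite directions.

GIB = 1024 ** 3


def min_copy_seconds(disk_bytes: int, link_bits_per_s: float = 1e9) -> float:
    # Each direction carries the whole disk once, so the transfer needs
    # at least disk_bytes * 8 bits at the line rate.
    return disk_bytes * 8 / link_bits_per_s


print(f"{min_copy_seconds(4 * GIB):.1f} s")
```

This prints `34.4 s`: even in this ideal case, the link is fully occupied for over half a minute per 4 GB copied, leaving little bandwidth for ordinary virtual machine I/O. With Clone Blocks, almost none of that data crosses the link.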

You meant to say:

The bandwidth saving features of VAAI Clone Blocks are perhaps most likely to have a real good impact on iSCSI based storage, where we today typically use 1 Gbit/s networks

Rather than:

The bandwidth saving features of VAAI Clone Blocks are perhaps most likely to have a real good impact on iSCSI based storage, where we today typically use 1 GBit/s networks

Thanks Chad, changed to lowercase b in “Bit”.

Hi Rickard, very nice article ….

Would it be more accurate to say ‘same storage array’ rather than SAN? One could have two or more datastores in the same SAN, but if those datastores are on different storage arrays (array1 and array2, for example) and a file copy and/or Storage vMotion is performed from one of these datastores to the other, the VAAI primitives would not be invoked.

Also, SAN on its own really just means the Storage Area Network.

Some minor typos for you to correct (if you feel so inclined) ..

“Close to zero storage traffic” — I think you mean to say “close to zero storage network traffic” – there will still be traffic within the storage array that is performing the XCOPY to move disk blocks from disk to disk.

cheers,

Peter

Hello Peter,

and thank you for your comment. I totally agree that I sometimes use the term “SAN” loosely; often it “means” the storage array, as in your very correct point that the LUNs need to be within the same array to actually use the XCOPY primitive.

The “storage traffic” was meant from the perspective of the ESXi host and the storage network, not within the array itself. (It might even be possible to have close to zero traffic inside the array as well, if the array could simply relocate the block pointers in some suitable vendor-internal way.) I will see whether I can clarify these phrasings in the article; I do want to use the correct terms.

Thanks again for your comment,

best regards, Rickard