How to troubleshoot Jumbo Frames with vmkping on ESXi hosts or ping on Windows servers and avoid common mistakes. Learn the correct syntax for vmkping, or the ping tests will be useless.

“Jumbo frames” is the ability to use Ethernet frames larger than the default maximum of 1518 bytes. As explained here, this can increase the total throughput of the network and decrease the load on both hosts and storage. In this blog post we will see how to verify that Jumbo Frames have been configured correctly and are actually working.

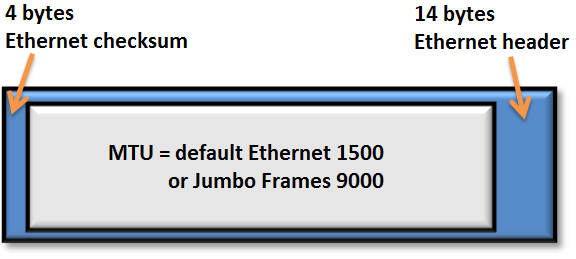

When configuring Jumbo Frames we typically define a new MTU (Maximum Transmission Unit), which is the largest payload that can be carried inside an Ethernet frame. For ordinary Ethernet the maximum frame size is 1518 bytes, of which 18 are used by Ethernet for header and checksum, leaving 1500 bytes to be carried inside the frame.

Since Jumbo Frames are not really a defined standard there is no actual rule on what MTU size to use, but a very common value is 9000, six times the standard payload. The Ethernet frame size is then 9018 bytes, since the MTU defines only what can be carried inside the frame.
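The relationship between MTU and frame size can be sketched in a few lines of Python. This is only an illustration of the arithmetic above; the function and constant names are made up for this example, and the 14 + 4 byte split of the 18 bytes of Ethernet overhead (header plus checksum) and the optional 4-byte VLAN tag are the usual Ethernet values.

```python
# Illustrative arithmetic only; names are hypothetical, not part of any tool.
ETHERNET_HEADER_BYTES = 14  # destination MAC (6) + source MAC (6) + EtherType (2)
ETHERNET_FCS_BYTES = 4      # frame check sequence (the checksum)
VLAN_TAG_BYTES = 4          # optional 802.1Q VLAN tag


def frame_size(mtu, vlan_tagged=False):
    """Return the total Ethernet frame size in bytes for a given MTU."""
    overhead = ETHERNET_HEADER_BYTES + ETHERNET_FCS_BYTES
    if vlan_tagged:
        overhead += VLAN_TAG_BYTES
    return mtu + overhead


print(frame_size(1500))                    # 1518
print(frame_size(9000))                    # 9018
print(frame_size(9000, vlan_tagged=True))  # 9022
```

Some switches ask for the frame size rather than the MTU, so it is worth checking which of the two numbers a given device expects.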

In VMware ESXi 5.0 it takes far less work to configure Jumbo Frames than in earlier releases, since the MTU for both vSwitches and VMkernel ports can now be changed in the GUI. Assuming this has been done, we now want to verify that it is actually working. The best way is to use the ESXi Shell, either directly at the ESXi console or over SSH.

We can now use the vmkping command (VMkernel ping) to try the larger frame sizes. There are, however, some common mistakes that should be avoided.

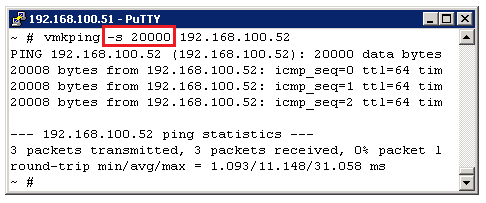

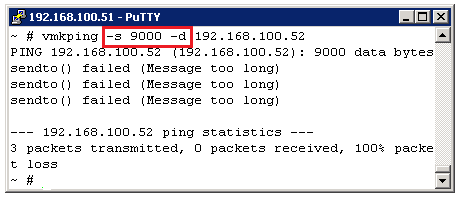

With vmkping we can use the -s option to set the ICMP payload size. The first mistake is to set this to 9000 and then ping, for example, the iSCSI SAN target. If this succeeds, as above, we might think Jumbo Frames have been confirmed end to end, but they have not.

Let us now try a payload of 20000 bytes, and it works too! Perhaps there are really giant jumbo frames on this network? This might seem strange, but what is happening is ordinary IP-level fragmentation. When IP has a packet too large to fit into a single frame, it splits the packet into fragments, so all kinds of sizes will seem to work fine. To stop IP from “helping” us with our Jumbo Frames test we must add the -d flag to vmkping, which forbids IP fragmentation. On a Windows server we would use -f.
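To see why almost any size "works" when fragmentation is allowed, here is a rough Python sketch that estimates how many IP fragments a ping would be split into. It assumes a 20-byte IP header without options and the standard rule that every fragment except the last carries a multiple of 8 bytes; the function name is hypothetical.

```python
import math

IP_HEADER_BYTES = 20    # assumes an IPv4 header without options
ICMP_HEADER_BYTES = 8


def fragment_count(icmp_payload, mtu):
    """Estimate how many IP fragments carry one ping of the given payload size."""
    ip_payload = icmp_payload + ICMP_HEADER_BYTES
    if ip_payload + IP_HEADER_BYTES <= mtu:
        return 1  # fits in a single frame, no fragmentation needed
    # Every fragment except the last must carry a multiple of 8 bytes.
    per_fragment = (mtu - IP_HEADER_BYTES) // 8 * 8
    return math.ceil(ip_payload / per_fragment)


print(fragment_count(9000, 1500))   # 7 fragments on a standard 1500 MTU path
print(fragment_count(20000, 1500))  # 14
print(fragment_count(9000, 9000))   # 2, even with Jumbo Frames enabled
print(fragment_count(8972, 9000))   # 1
```

A successful ping with a 9000 or 20000 byte payload therefore says nothing about the MTU of the path as long as fragmentation is permitted.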

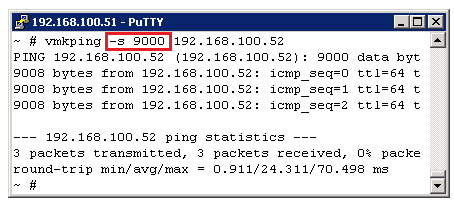

With IP fragmentation disabled we can see (above) that our ping fails. This might suggest that Jumbo Frames are not working, but they may well be, since there is another common mistake with the vmkping command.

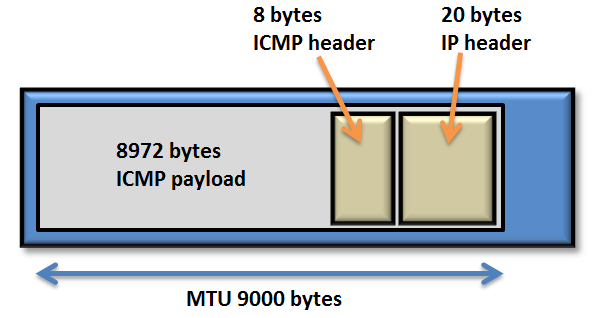

The -s flag does not set the total packet size but the ICMP payload, i.e. the number of bytes after the ICMP header. The ICMP header itself consumes 8 bytes and sits on top of IP, which has a 20-byte header.

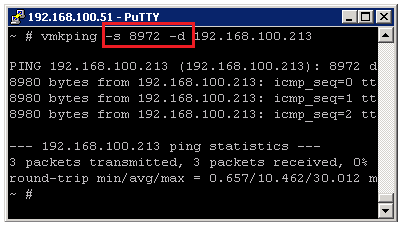

Since the MTU is 9000 but ICMP and IP consume 8 + 20 bytes, we have to set the payload size to 28 less than 9000, that is 8972 bytes.

When this works, we have finally proven that Jumbo Frames with an MTU of 9000 work from our host through the network to the target.
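The same calculation applies to any MTU, as this small Python sketch shows. The function name is made up for illustration, and it assumes a plain 20-byte IP header without options.

```python
IP_HEADER_BYTES = 20    # assumes an IPv4 header without options
ICMP_HEADER_BYTES = 8


def max_icmp_payload(mtu):
    """Largest ICMP payload that fits in one unfragmented packet for this MTU."""
    return mtu - IP_HEADER_BYTES - ICMP_HEADER_BYTES


print(max_icmp_payload(9000))  # 8972
print(max_icmp_payload(1500))  # 1472
```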

So for ESXi hosts use: vmkping -s 8972 -d IP

On Windows Server we could use ordinary Ping in the command prompt with the options -l (lowercase L) for payload length and -f for Do not Fragment: ping -l 8972 -f IP
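If the test has to be repeated for several MTU values or targets, the two command lines can be generated rather than typed by hand. The Python sketch below only builds the argument lists and does not run anything; the function name, the platform labels, and the address 192.0.2.10 are placeholders for this example, while the flags are the ones described above.

```python
def jumbo_ping_command(target_ip, mtu=9000, platform="esxi"):
    """Build the ping command that tests one unfragmented packet of MTU size."""
    payload = str(mtu - 28)  # subtract 20 bytes of IP header and 8 bytes of ICMP header
    if platform == "esxi":
        # -s sets the ICMP payload size, -d forbids fragmentation
        return ["vmkping", "-s", payload, "-d", target_ip]
    if platform == "windows":
        # -l (lowercase L) sets the payload length, -f sets Do not Fragment
        return ["ping", "-l", payload, "-f", target_ip]
    raise ValueError("unknown platform: " + platform)


# 192.0.2.10 is a placeholder address, replace it with the storage target.
print(" ".join(jumbo_ping_command("192.0.2.10")))
print(" ".join(jumbo_ping_command("192.0.2.10", platform="windows")))
```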

Remember to test this from all hosts and servers that should be able to access a storage device with jumbo frames. This verifies not only the host configuration but also the physical switches along the path.

Thank you for a great guide and good explanations. Helped me figure out a missed MTU 9000 on a vmk port.

Nice to hear that it was helpful Anders. Thank you for your comment,

regards Rickard

Thanks for a clear and lucid explanation of the overhead bytes Rickard – very useful!

Glad to be able to help, thanks for commenting!

Great article, very well written! This helped me with a problem I was working on today.

Nice to hear that Rob, thanks for your comment.

Regards, Rickard

Hi there, I’m having trouble getting this working on my host.

I can do the test as you can here but my Switch cannot see ANY jumbo frames coming from that interface, which would suggest it is still fragmenting the packets for some reason.

Got a thread here if you could help: http://communities.vmware.com/message/2266519#2266519

Hi Rickard, thanks for your article.

Thank you for this great post! I was struggling with my ESX hosts and Dell Force10 switches. When I configured an MTU of 9000 on the Dell Force10 switch, Jumbo Frames were not working.

When I configured an MTU of 9018, everything worked!

Keep up the good work!

Hello Stefan,

and thank you for your comment! Glad that you were able to fix your problem.

It is interesting to note that the switch setting of MTU=9000 should really work IF properly handled by the switch. Since the MTU is really only the payload inside the Ethernet frame, the “outer” 18 bytes should be implied. It seems, however, that your switches misinterpret the MTU value and treat it as the “maximum frame size”, which is incorrect.

Please note that if you are using VLAN tagging, the frame will be 4 bytes larger. This does not affect the MTU, only the total frame size, which could be 9022 bytes in that case.

Best regards,

Rickard

A great post. Many thanks.

Thanks, this is very helpful.

Hi Rick – This post was a great help whilst I was tracking down a HP VSA \ ESXi jumbo frames issue and allowed me to track down whether Jumbo frames had been on or off and confirm the state of play – Many thanks

Hi Ricard,

Thank you for this great post, which definitely helps a vm admin or architect.

I have a small query,

Are there any VMware proprietary tools or commands to verify the same? Or is this method still the only easy way to verify the JF flow?

Regards

Raj_Navalgund

Bangalore

Thanks, I have solved my jumbo frame issue thanks to your article.

Thanks for the explanation. However, when I try payload size 8972 with vmkping, it fails, and when I try 8950 or any lower value, it works. Does that mean Jumbo Frames are really in use for storage communication? I’m OK with frame sizes slightly lower than 8972, but I want to make sure it is not as low as 1518. Kindly advise.