IOPS, latency and throughput, and why they are important when troubleshooting storage performance

In this post I will define some common terms regarding storage performance. Later we will look at different tools for stressing and measuring it. By “storage” I mean all forms of mechanical disk drives: local SATA drives in PCs, SCSI disks in servers, or large numbers of expensive RAID-connected drives in a SAN.

The most common figure quoted by a disk manufacturer is how much throughput a certain disk can deliver. This number is usually expressed in megabytes per second (MB/s), and it is easy to believe that this is the most important factor to look at. The maximum throughput for a disk could be, for example, 140 MB/s; however, for several reasons this is often not as critical as it seems, and we should not expect to actually achieve that throughput on a typical disk in production. We shall return to this value later.

The next term, which is very common, is IOPS. This means IO operations per second: the number of read or write operations that can be done in one second. A certain number of IO operations will also give a certain throughput in megabytes per second, so the two are related. A third factor is involved, however: the size of each IO request. Depending on the operating system and the application or service that needs disk access, a request will be issued to read or write a certain amount of data at a time. This is called the IO size and could be, for example, 4 KB, 8 KB, 32 KB and so on. The minimum amount of data to read or write is the size of one sector, which is only 512 bytes.

This means:

Average IO size x IOPS = Throughput in MB/s
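As a small illustration of the relation above, here is a minimal Python sketch. The function name `throughput_mb_per_s` and the sample values (8 KB requests at 1000 IOPS) are made up for the example, and it assumes decimal megabytes (1 MB = 1,000,000 bytes); with 1024-based units the result would be slightly lower.

```python
def throughput_mb_per_s(io_size_bytes: int, iops: float) -> float:
    """Throughput in decimal megabytes per second (1 MB = 1,000,000 bytes)."""
    return io_size_bytes * iops / 1_000_000


# Hypothetical workload: 8 KB requests at 1000 IOPS.
print(throughput_mb_per_s(8 * 1024, 1000))
```

The same function can be turned around: if you know the throughput and the IO size, dividing one by the other gives the IOPS.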

Each IO request takes some time to complete; the average of this time is called the average latency. Latency is measured in milliseconds (ms) and should be as low as possible. Several factors affect this time, and many of them are physical limits due to the mechanical construction of the traditional hard disk.

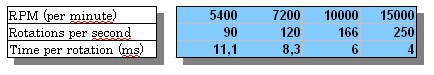

The disk has platters that rotate at a speed expressed in revolutions per minute, or “RPM”: the number of full rotations the platters make in one minute. Since the head (which does the actual read or write) can only move across the platter on its arm and cannot follow the rotation, it will often have to wait for the platter to spin to the right position. Common speeds are 5400 or 7200 RPM for consumer SATA disks and 10000 or 15000 RPM for high-performance server and SAN disks.

This gives a time for one full rotation of 60,000 / RPM milliseconds: about 11.1 ms at 5400 RPM, 8.3 ms at 7200 RPM, 6 ms at 10000 RPM and 4 ms at 15000 RPM.

So one full rotation of the platter takes from 4 to 11 milliseconds depending on the RPM. This is called the rotational delay and is important since the disk can at any moment be instructed to read any sector of any track. The disk spins at all times, and it is most likely that the correct sector will not (by pure luck) be right under the read head; instead the head will literally have to wait for the platter to spin around until the wanted sector(s) become reachable.
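The rotation times follow directly from the RPM, which a few lines of Python can show. This is only a sketch: the function names are invented here, and the "average wait" column assumes the wanted sector is equally likely to be anywhere on the track, so that on average the head waits half a rotation.

```python
def full_rotation_ms(rpm: int) -> float:
    """Time for one full platter rotation, in milliseconds."""
    return 60_000 / rpm


def average_rotational_wait_ms(rpm: int) -> float:
    """Assumption: the target sector is uniformly placed, so half a rotation on average."""
    return full_rotation_ms(rpm) / 2


for rpm in (5400, 7200, 10000, 15000):
    print(f"{rpm:>5} RPM: {full_rotation_ms(rpm):5.1f} ms per rotation, "
          f"{average_rotational_wait_ms(rpm):4.1f} ms average wait")
```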

The next factor in the latency is the movement of the disk arm and head. While waiting for the sector to come spinning around, the head also has to position itself at exactly the right track to be able to “catch” the information as it comes flying by. The arm is fixed at one end but can swing from the inner to the outer part of the disk area (see above), and in this way it can reach any position on the disk, even if it sometimes has to wait for the correct area to spin into its reach. The time it takes to physically move the head is called the seek time. (In the specification of a disk you can find the average seek time; the lower the seek time, the faster the arm moves.)

Once the arm is in the right position and the platter has rotated far enough, we can begin to read. Depending on the requested IO size, this will take different amounts of time. If the IO size is very small (the minimum is 512 bytes), the IO is completed after the first sector is read, but if the request is 4 KB, 32 KB or even 128 KB it will take longer. This is called the transfer delay: the time it takes to do the actual read or write. We also hope that the next data is located on the following sectors of the same track. Then the arm can wait for more data to roll in and just continue reading. If the data is located on different parts of the disk, however, we have to reposition the arm and wait again for the disk to spin. This is why fragmentation of a file system hurts performance so much. The more data that is placed contiguously on the disk, the better.
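To get a feeling for the size of the transfer delay, here is a rough Python sketch. It assumes a sustained media rate of 140 MB/s, borrowed from the example figure earlier in the post, and decimal megabytes; a real drive's rate varies across the platter, so treat the numbers as illustrative only. The function name is made up for the example.

```python
def transfer_time_ms(io_size_bytes: float, media_rate_mb_per_s: float = 140.0) -> float:
    """Time to move io_size_bytes at the given sustained rate, in milliseconds."""
    bytes_per_ms = media_rate_mb_per_s * 1_000_000 / 1000
    return io_size_bytes / bytes_per_ms


for io_size in (512, 4 * 1024, 32 * 1024, 128 * 1024):
    print(f"{io_size:>7} bytes: {transfer_time_ms(io_size):.3f} ms")
```

Under these assumptions even a 128 KB request transfers in under one millisecond, which is small compared to the several milliseconds that a rotation or a seek can cost.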

(An interesting note is that you can actually hear the arm move: the typical intensive clicking noise from a hard disk is the movement of the disk arm. When doing sequential access the disk is very quiet.)

There is a relation between the IO size and the IOPS: if the IO size is small we can get a higher number of IOPS while reaching a certain throughput (MB/s). An example from a SATA disk in my computer when running a disk stress tool:

IO size = 4 KB gives IOPS = 29600 (And then IO size x IOPS = Throughput )

4096 x 29600 = 121 MB/s

When doing a test with larger IO requests, 32 KB, the number of IOPS drops:

IO size = 32 KB gives IOPS = 3700

32768 x 3700 = 121 MB/s (IO size x IOPS)

So larger IO request sizes can mean fewer IOPS but still the same throughput.
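The two measurements can be checked with a short Python sketch. The IO sizes and IOPS values are the ones quoted in this post; the helper name `bytes_per_second` is invented for the example. It prints the result both in decimal megabytes (MB, 1,000,000 bytes) and in binary mebibytes (MiB, 1024 x 1024 bytes), since the two units are easy to mix up.

```python
# IO size in bytes -> sequential IOPS, as measured in the post.
MEASURED = {4096: 29600, 32768: 3700}


def bytes_per_second(io_size_bytes: int, iops: int) -> int:
    """Raw throughput in bytes per second."""
    return io_size_bytes * iops


for io_size, iops in MEASURED.items():
    bps = bytes_per_second(io_size, iops)
    print(f"{io_size:>6} B x {iops:>6} IOPS = {bps:,} B/s "
          f"= {bps / 1_000_000:.1f} MB/s = {bps / 1024**2:.1f} MiB/s")
```

Both rows come out at exactly the same number of bytes per second, because the IO size grew by a factor of eight while the IOPS dropped by the same factor.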

This leads to the final factor: how much of the requested data is actually located close together? The terms for this are sequential and “random” access. By random we mean that few IO requests are for contiguous data; instead there are many small requests located on different parts of the disk. Random access puts the highest pressure on the disk, because the actions that cost time have to be done very often: moving the disk arm to a new position and waiting for the disk to spin around. Then comes a small read or write operation, perhaps 4 KB, before moving on to a new place.

Random access will reduce the number of IOPS drastically, and with it the throughput. The SATA disk I used as an example above could give us 29600 IOPS of 4 KB = 121 MB/s. These are high values, but they were for sequential access. If we run the same test with only random access we get this result:

IO size (random) x IOPS = Throughput

4 KB x 245 = 980 KB/s = 0.96 MB/s

So it is the same disk, but with a different access pattern the performance drops sharply. The IOPS went from 29600 to 245 and the throughput decreased from 121 MB/s to less than 1 MB/s, and this is why the maximum throughput is not the most important value to study. It would not matter if this disk were capable of delivering, say, 500 MB/s if the typical access is random, as we would still get just a few MB/s.
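The comparison can be put into a small Python sketch. The IOPS figures are the ones measured in this post; the function names and the idea of modelling the result as "whichever limit is hit first" are simplifications made for the example. It uses decimal megabytes, so the random figure comes out at about 1.0 MB/s rather than the 0.96 obtained with 1024-based units.

```python
IO_SIZE_BYTES = 4096
SEQUENTIAL_IOPS = 29600  # measured in the post
RANDOM_IOPS = 245        # measured in the post


def throughput_mb_per_s(iops: float, io_size_bytes: int = IO_SIZE_BYTES) -> float:
    """Throughput in decimal MB/s for a given IOPS and IO size."""
    return iops * io_size_bytes / 1_000_000


def achievable_mb_per_s(iops: float, peak_mb_per_s: float,
                        io_size_bytes: int = IO_SIZE_BYTES) -> float:
    """Simplified model: throughput is capped by whichever limit is hit first."""
    return min(peak_mb_per_s, throughput_mb_per_s(iops, io_size_bytes))


slowdown = SEQUENTIAL_IOPS / RANDOM_IOPS
print(f"Random access is about {slowdown:.0f} times slower than sequential")

# A hypothetical disk with a 500 MB/s peak, still limited to 245 random IOPS:
print(f"{achievable_mb_per_s(RANDOM_IOPS, peak_mb_per_s=500.0):.2f} MB/s")
```

With only 245 random IOPS the peak figure never comes into play, which is the point made above.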

Since most actual disk access on production systems consists of small IOs randomly spread over the disk, the most critical factor is to get as many IOPS as possible with low average latency. For a single disk this means we want to minimize the rotational delay by having as high an RPM as we can (preferably 15K RPM for important servers) and minimize the seek delay, when the disk head is moving into position, by selecting disks with a low average seek time.

As we have seen, random access IO brings the number of IOPS down to a few hundred, so the next natural step is to add more read heads, that is: add more physical disks (often called spindles), each with its own moving components, and group these into RAID systems to get more work done simultaneously.
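As a very rough sizing sketch in Python, the number of spindles needed for a given random IOPS target can be estimated as below. This is an idealised assumption, not a RAID model: it ignores write penalties of the different RAID levels, controller limits and caching. The target of 5000 IOPS is a hypothetical figure; the 245 IOPS per disk is the random result measured above.

```python
import math


def disks_needed(target_iops: float, iops_per_disk: float) -> int:
    """Idealised estimate: assumes IOPS add up linearly across disks."""
    return math.ceil(target_iops / iops_per_disk)


# Hypothetical target of 5000 random IOPS with disks delivering 245 IOPS each.
print(disks_needed(5000, 245))
```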

Or we could look at storage devices without any moving parts, also known as Solid State Drives (SSD).

Hi Rickard,

Above explanation is very good.. However, I would like to know how the calculation is done for IOPS

IO size = 4 KB gives IOPS = 29600

Can you please explain…

Thanks

Hello Saravanan,

and thanks for your comment.

The “iops” is really just the amount of read and write operations each second. This could be measured in a number of ways. If we know the io size, i.e. the average size of each read or write request and the total throughput then we could calculate the number of IO per second this way.

I think Saravanan is looking for the formula to calculate IOPS.

IOPS = 1 / (average seek time + average latency)

So look in the spec for your disk. Let's take an example: an average seek time of 8 ms and an average latency of 3 ms for a disk at 7200 RPM.

1 / (0.008 + 0.003) = 90 IOPS

You could try a calculator if you like here.
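The estimate in this comment can be sketched in Python as follows. The function name is invented for the example, and the formula is only a rough approximation for small random IOs: it ignores the transfer time, caching and any queuing in the drive.

```python
def estimated_random_iops(avg_seek_ms: float, avg_latency_ms: float) -> float:
    """Rough estimate: one IO takes one average seek plus one average rotational latency."""
    return 1000 / (avg_seek_ms + avg_latency_ms)


# Values from the comment above: 8 ms seek, 3 ms latency.
print(f"{estimated_random_iops(8, 3):.1f} IOPS")
```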

Thanks. It gives very good and quick information.

Looks like the IOPS here is calculated incorrectly. Not sure why the block size of 4 KB was multiplied by 1024.

IO size = 4 KB gives IOPS = 29600 (And then IO size x IOPS = Throughput )

4096 x 29600 = 121 MB/s

Actual calculation should have been

IOPS=121*1024/4

The multiplication by 1024 was done to convert 121 MB to KB

Hello Harsh,

and thank you for your comment.

I do not really follow your question; could you clarify what you find incorrect? The specific example was an observation that when I used an IO size of 4 KB (4096 bytes) the disk achieved around 29600 sequential IOs per second. To get the throughput, i.e. the number of bytes per second, you take the IOPS (29600) multiplied by the IO size (4096), which is 121241600. This number, to be exact, should be divided by 1024 twice to get the “MiB”.

Best regards, Rickard

When we have an IO size of 4 KB, how do we calculate the IOPS? I understood the throughput calculation.

Holy moly this is the best explanation I’ve read. Thank you so much for this.

Hi

Awesome article. Quick question, it says for random access “throughput is not the most important value” am wondering if you could elaborate. My understanding is that for random – rotation, seek and iops all play a factor and finally thoughput will take all these into consideration. So if for random if iops drops then throughput will also drop. So can we not consider throughput as the final benchmark ??

Thx

Hi.

I am having SSDs.

If IO block size is high, will I get low IOPS even if the storage is not utilized to even 30% of its capacity?

Vaibhav.

so does look like IOPS factors in latency i.e for random read/write – IOPS goes down and impacts throughput. So if we specify that a 4KB size and a 90IOPS with a disk through put X MBPS then we do not really need to worry about latency right as our 90 IOPS will ensure that customers provide a disk with all the right kind of rpm and seek time etc that satisfies 90IOPS requirement. No ??

how to calculate block size if I have read/write average & number of read/write

Very Useful article !! Which counter to use to know I/O size.

Nice Job! Thank you very much!

For the same application/service, IO size is fixed (e.g. 4KB is configured), or it can be changed from one IO request to another?

Sorry I cannot find the answer from your article. Please advise if I missed it.