The vSphere virtual switch has an option called “Notify Switches” that can be enabled or disabled. In this post we shall see what this setting actually does and how it works, and discuss whether the RARP protocol itself is important.

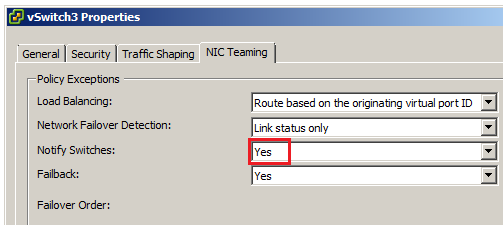

To see or change the current setting, select Properties on a vSwitch, then Edit, and then the NIC Teaming tab.

When the value is set to Yes, ESXi is allowed to occasionally send faked frames on behalf of the virtual machines running on the host.

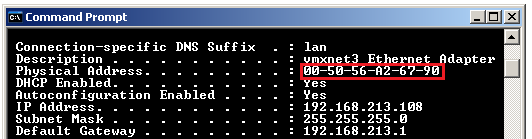

The goal of sending these frames is to make sure the physical switches in the network learn the location of the virtual machines. A physical switch learns by observing each incoming frame and noting the Source MAC Address field. Based on that information, the switches build tables that map each MAC address to the switch port where that address was seen.
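The learning behavior described above can be sketched in a few lines of Python. This is only a toy model: the class name `LearningSwitch`, the port numbers and the MAC addresses are made up for illustration, and real switches also age out entries and handle VLANs.

```python
class LearningSwitch:
    """Toy model of a switch MAC address table (hypothetical, for illustration)."""

    def __init__(self):
        self.mac_table = {}  # source MAC -> port where it was last seen

    def receive(self, port, src_mac, dst_mac):
        # Learning step: remember which port the source MAC arrived on.
        self.mac_table[src_mac] = port
        # Forwarding step: a known destination goes out of one port,
        # anything unknown (including broadcast) is flooded.
        return self.mac_table.get(dst_mac, "flood")


switch = LearningSwitch()
vm_mac = "00:50:56:aa:bb:cc"      # made-up VM MAC address
client_mac = "00:11:22:33:44:55"  # made-up client MAC address

switch.receive(port=1, src_mac=vm_mac, dst_mac=client_mac)  # VM seen on port 1
first = switch.receive(port=7, src_mac=client_mac, dst_mac=vm_mac)
switch.receive(port=2, src_mac=vm_mac, dst_mac=client_mac)  # VM now seen on port 2
second = switch.receive(port=7, src_mac=client_mac, dst_mac=vm_mac)
print(first, second)
```

The point of the sketch is that the table entry for the VM changes only when a frame carrying the VM's MAC address as source arrives on a new port, regardless of what the frame contains.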

There are at least three different occasions where these messages are sent by ESXi:

1. When a virtual machine is powered on, the ESXi host makes sure the network is aware of this new VM, its MAC address, and its physical location (switch port).

2. When a virtual machine is moved by vMotion to another host, it is crucial to rapidly notify the physical network of the new placement of the virtual server.

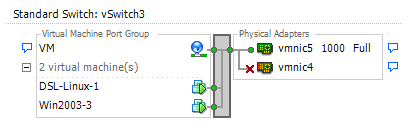

3. If a physical NIC on the host loses its connection and the vSwitch has at least two vmnics as uplinks, all traffic from the VMs is “moved” to the remaining interface. In this situation too, the ESXi host must very quickly inform the network of the new correct uplink for reaching the VM.

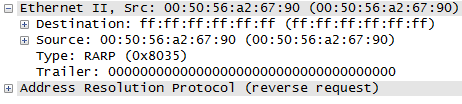

The protocol used is RARP (Reverse Address Resolution Protocol). Texts about vSphere sometimes imply that RARP itself is important and actually does something in this process. However, RARP is a very old and obsolete protocol that almost no operating systems or devices support today.

So why send frames that almost nobody can read? Because the only thing that matters is that all physical switches see the Source MAC address and learn the new location. In reality the payload (in this case RARP) could be almost anything.
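To make the frame layout concrete, here is a minimal Python sketch that builds the raw bytes of such a frame. The Ethernet header (broadcast destination, VM MAC as source, EtherType 0x8035) is the part that matters to the switches. The RARP payload follows the RFC 903 field layout, but the values placed in it are assumptions for illustration and not a byte-exact copy of what ESXi emits. The function name `build_notify_frame` and the MAC address are hypothetical.

```python
import struct

BROADCAST = bytes.fromhex("ffffffffffff")
ETHERTYPE_RARP = 0x8035


def mac_to_bytes(mac):
    """Convert 'aa:bb:cc:dd:ee:ff' or 'AA-BB-CC-DD-EE-FF' to 6 raw bytes."""
    return bytes.fromhex(mac.replace(":", "").replace("-", ""))


def build_notify_frame(vm_mac):
    """Build a broadcast RARP frame whose source MAC is the VM's MAC."""
    src = mac_to_bytes(vm_mac)
    # Ethernet header: destination, source, EtherType.
    eth_header = BROADCAST + src + struct.pack("!H", ETHERTYPE_RARP)
    # RARP header: hardware type 1 (Ethernet), protocol type 0x0800 (IPv4),
    # hardware length 6, protocol length 4, opcode 3 (reverse request).
    payload = struct.pack("!HHBBH", 1, 0x0800, 6, 4, 3)
    # Sender MAC/IP and target MAC/IP; the zeroed IP fields are an assumption.
    payload += src + bytes(4) + src + bytes(4)
    # Pad to the 60-byte Ethernet minimum (frame check sequence not included).
    return (eth_header + payload).ljust(60, b"\x00")


frame = build_notify_frame("00:50:56:aa:bb:cc")
print(frame[:14].hex())
```

A switch only needs bytes 6 to 11 of this frame, the source MAC address, to update its table; everything after the header is carried along unread.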

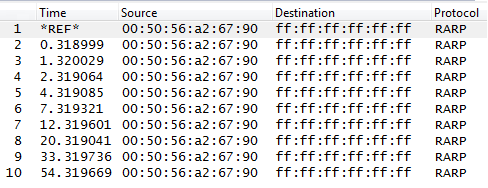

Here we see a virtual machine with a certain MAC address. This VM is being migrated with vMotion, and with Wireshark we shall see which RARP frames are sent.

In this packet trace we can see that the ESXi host sends RARP frames with the VM's MAC address as source. It also wants to be very certain that this new information reaches every switch, so it sends the frame ten times: first several times close together and then more spread out in time, with the last frame almost one minute after the first.

How can we be sure that all switches on the physical network see these frames? Because the destination is the special broadcast address FF-FF-FF-FF-FF-FF. A switch forwards a broadcast frame out of every port except the one it arrived on, which makes the whole broadcast domain aware of the new location.
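The effect of the broadcast can be illustrated with a small simulation. This is a sketch under stated assumptions: a loop-free topology of three switches with made-up names and port labels, and a hypothetical `Switch` class that models only learning and flooding.

```python
class Switch:
    """Toy switch that learns source MACs and floods broadcasts (illustrative)."""

    def __init__(self, name):
        self.name = name
        self.mac_table = {}  # MAC -> local port
        self.links = {}      # local port -> (neighbour switch, neighbour port)

    def connect(self, port, other, other_port):
        self.links[port] = (other, other_port)
        other.links[other_port] = (self, port)

    def receive_broadcast(self, in_port, src_mac):
        # Learn (or relearn) where the source MAC lives.
        self.mac_table[src_mac] = in_port
        # Flood to every inter-switch link except the one the frame came in on.
        # Assumes a loop-free topology, otherwise this would recurse forever.
        for port, (neighbour, neighbour_port) in self.links.items():
            if port != in_port:
                neighbour.receive_broadcast(neighbour_port, src_mac)


core, access1, access2 = Switch("core"), Switch("access1"), Switch("access2")
access1.connect("uplink", core, "p1")
access2.connect("uplink", core, "p2")
switches = (access1, core, access2)
vm_mac = "00:50:56:aa:bb:cc"  # made-up VM MAC address

# VM powers on behind ESXi host A, attached to access1.
access1.receive_broadcast("esxi-a", vm_mac)
before = {s.name: s.mac_table[vm_mac] for s in switches}

# After vMotion, host B (attached to access2) sends the notification.
access2.receive_broadcast("esxi-b", vm_mac)
after = {s.name: s.mac_table[vm_mac] for s in switches}

print(before)
print(after)
```

One broadcast from the new host is enough to repoint the entry on all three switches, including the switch the VM just left.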

If Notify Switches is for some reason set to No, the behavior changes and the host stays silent when events like VM power-on, vMotion and vmnic link failure take place. Things will likely still work, because the switches relearn the location as soon as the VM itself sends a frame, but with a much higher risk of packet loss in the situations above.

In summary, the Notify Switches function is very useful and should be set to Yes. It allows the ESXi host to act on behalf of the virtual machines and send faked frames, making sure the MAC-to-port mapping tables of all physical switches are quickly updated.

Hi,

Really great information from you; now I understand the in-depth theory of RARP in ESXi. Thanks for your effort.

/ Gopi

We are likely facing the situation explained in http://kb.vmware.com/kb/1556.

After seeing your excellent explanation of this functionality, I don't want to lose it. Is there a way to leave this setting at Yes but somehow instruct the host to use the bogus MAC address (in its RARP) for each NLB guest?

If I register static arp entries on the hosts for each NLB guest, will this force the hosts to use such bogus MAC in RARP? Any help would be much appreciated, thanks in advance.

Hello Mohan,

and thank you for your reply.

From my understanding of Microsoft NLB clusters, I think the key point is that the NLB machines (physical or virtual) depend on the switches never learning their true position and therefore always flooding every incoming frame to all ports, making sure it reaches all the NLB machines.

Because of this, it is recommended to keep the NLB machines in their own VLAN to limit the frame flooding. If you do, you should be able to disable the “Notify Switches” setting on these portgroups only, and keep it enabled on the rest of your VM portgroups.

This is great information that helped me understand why vMotion sends RARP packets after the migration is over. One question, though: what if the server is connected directly to a Layer 3 switch/router?

If RARP is not processed by the switch/router (which is common now), the MAC-to-port mapping is updated by the RARP packet but the IP-to-MAC mapping is not. This could affect data traffic.

Thank you for your comment wildwill.

Does your question concern having one ESXi host directly connected to a routing device, with no layer two switching in between, and another ESXi host on the remote side of the router?

For vMotion to actually work you need a common layer two infrastructure, both for the vmkernel adapters with the vMotion tag and for all virtual machine networks. That means connecting two ESXi hosts to opposite sides of a router would not work with vMotion. (It could be done with different tunneling techniques, but not out of the box.)

As for the relation IP to MAC, typically done with classic ARP resolution, the Virtual Machine itself is not in any way changed in the vMotion process. This means the virtual network card will keep the same MAC address and the TCP/IP stack inside the guest operating system will have the same IP address.

Because nothing changes inside the guest, a vMotion transfer requires no change or update to existing ARP entries on any external devices.

Please return if something is unclear,

best regards, Rickard

I am having a problem where a vmotion completes successfully but the VM drop 4-6 pings instead of losing 1 or no pings. I have been troubleshooting this issue for several days and found your article helpful in explaining things, but I still have not solved the problem. I have the notify switches setting set on all hosts. I tried running wireshark on a vm that successfully vmotions with 1 or no ping drops (and vmotion that drops 4-6 pings) and I don’t see the rarp packets in either trace. All the ESXi logs seem to show the vMotion is successful, but for some reason the network switchover is slow. Any ideas?

Andrew, even I’m facing problem similar to what you’ve mentioned. Were you able to find the root cause?

Great explanation Rickard!

Thx!

I wonder why a gratuitous ARP is not sent instead: it is much more widely supported, not obsolete, and has the same effect.

Anyone knows?

Hello Pablo,

the main reason, in my opinion, is that it does not matter WHAT you send. The only important thing is that SOMETHING is sent with the hardware address of the VM as source MAC in the Ethernet frame.

A gratuitous ARP is not really needed, since the relation between IP and MAC does not change. It might also disturb more machines on the network, since everyone processes ARP (EtherType 0x0806) but rarely RARP (0x8035).

Regards, Rickard

Great writing. I will read more. Subscribing.

Great explanation, thanks!

please any answer for this comment

I am having a problem where a vmotion completes successfully but the VM drop 4-6 pings instead of losing 1 or no pings. I have been troubleshooting this issue for several days and found your article helpful in explaining things, but I still have not solved the problem. I have the notify switches setting set on all hosts. I tried running wireshark on a vm that successfully vmotions with 1 or no ping drops (and vmotion that drops 4-6 pings) and I don’t see the rarp packets in either trace. All the ESXi logs seem to show the vMotion is successful, but for some reason the network switchover is slow. Any ideas?

Great article, though also experiencing this issue. Hope you can explain.

Thanks for your comment!

The RARP packets themselves will not be seen inside the VM that “sends” them, because it is the VMkernel that sends them on its own, pretending to be the VM. If you run Wireshark on some other port in the same physical VLAN, you should be able to see them.

As for the general question, it is unfortunately very difficult to give any advice, since so many factors of the overall configuration are unknown.

Best regards, Rickard

This is the best document I have found on this topic. Someone referred me to this link and my problem was solved easily.

Good article, thank you.

Very cool article indeed.

But back in 2022, with DVS 6.6, I'm still not able to tell the network team whether my DVS is sending a DVS-wide, ESXi-wide or VM-wide ARP table 🙂

I still have RARP broadcast storms and don't really know why (more than 19,000 RARP requests in 5 minutes).

Any technical documentation on DVS 6.6 and RARP to advise?

thanks 🙂

The issue is most modern network switches just ignore RARP entirely. So they don’t see the signaling at all that the MAC has moved to a new port.

The other issue is that many networks are delivered on top of L3 EVPN Fabrics, which send type2 mac/ip routes to update other switches and identify where a MAC and IP address live on the network. GARPs will update both the MAC/IP and the port and allow the switch to send a new type2 mac/ip evpn route to all the other switches in the fabric to inform them of where the VM now lives.

Thanks for your comment, Eric. A late reply:

“The issue is most modern network switches just ignore RARP entirely. So they don’t see the signaling at all that the MAC has moved to a new port.”

I would argue that no device, either a switch or an operating system, supports RARP. However, that is not really necessary. The only important part is that this frame is passed on through the network, like any other Ethernet broadcast frame, and that the physical switches, automatically, as with any other frame regardless of the protocol, study the source MAC address field.

Best regards, Rickard

Hi Rickard,

I have the problem that after a failover the gratuitous ARP is not delivered outside the vSphere cluster, so the cluster service isn't reachable until the ARP cache of the network components is cleared. Is the “Notify Switches” option the solution, or is there another option to configure?

Thanx Karl

Hello Karl,

And thanks for your question. I don't know what specific type of cluster service you are using, but you mention ARP and the ARP caches on some devices. If that is the case, I would say that “Notify Switches” is not the solution.

ARP is the relationship between Layer 3 (IPv4) and Layer 2 (the MAC address). ARP cache issues arise when the MAC address behind an IP address has changed, in which case the cache must be flushed. A virtual machine being vMotioned or restarted on another host retains both its IP address AND its MAC address, so no flushing of ARP caches is required.

The RARP protocol used with the “Notify Switches” feature serves only an indirect purpose: to inform the switches of the relationship between Layer 2 (the VM MAC address) and Layer 1 (the physical interface in the physical network). While the VM MAC address has not changed, its location in the network is now different.

Best regards, Rickard