How to reclaim disk space from virtual machines with thin virtual disks in VMware vSphere.

Thin disks start very small and grow as data is added within the VM. However, if data is logically deleted inside the VM, the thin disk does not shrink. If there is a large difference between the amount of data stored by the VM and the space consumed by the virtual disk file, it can be worth reclaiming the space.

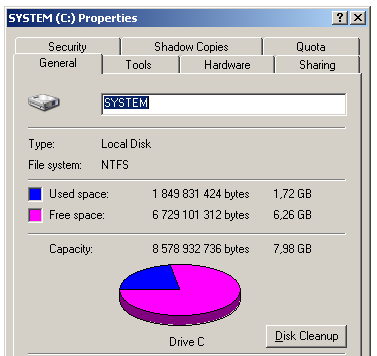

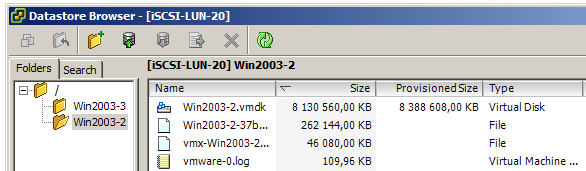

In this example we have a thin-provisioned VMDK file with a size of 8 GB, which is the size of the disk as it appears to the virtual machine, in this case a Windows Server 2003 guest.

Inside the guest VM we can see that the used space is about 1.72 GB out of these 8 GB, so a large percentage of the disk is free.

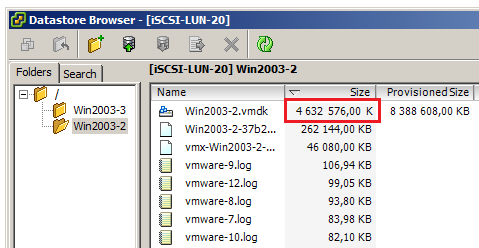

In the Datastore Browser in the vSphere Client, however, we can see that the VMDK file consumes around 4.6 GB on the datastore, even though the VM itself only stores about 1.7 GB. Most likely this comes from files that once existed inside the VM and have later been deleted.

When files are deleted inside Windows, only the logical information about the files is removed. The physical content of each file is left on the disk, later to be overwritten by other files.
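As a rough illustration of the gap described above, the following sketch subtracts the space used inside the guest from the space the VMDK consumes on the datastore. The function name is hypothetical and the result is only an estimate of what a reclaim could return.

```python
def reclaimable_gb(vmdk_on_datastore_gb: float, guest_used_gb: float) -> float:
    """Rough upper bound on the space a reclaim could return (never negative)."""
    return max(0.0, vmdk_on_datastore_gb - guest_used_gb)


# Figures from the example: 4.6 GB on the datastore, 1.72 GB used in the guest.
dead_space = reclaimable_gb(4.6, 1.72)
print(f"Roughly {dead_space:.2f} GB of dead space")
```

With the numbers from this example, close to 3 GB of the 4.6 GB file is dead space.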

Two problems must now be solved:

1. Since the VMkernel cannot read or understand guest file systems like NTFS, we cannot reclaim the space until these blocks are actually zeroed from inside the guest. We need an extra tool for this.

2. There is no real shrink function in vSphere, but we can use a trick involving datastores with different VMFS block sizes together with Storage vMotion.

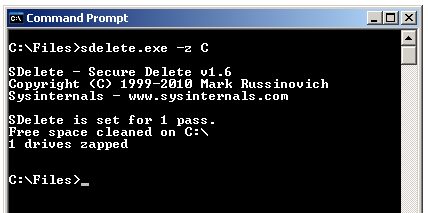

To solve the first problem we can use a tool like SDelete (sdelete.exe) from the Sysinternals suite, available as a free download from Microsoft. Get the file and extract it inside the VM.

Make a note of the drive letter for the partition that we want to reclaim space from and run:

sdelete -z DRIVELETTER

The tool now writes zeros into every empty part of the partition. This generates a very large amount of write I/O against the storage, so it is best done during off-peak hours.

This will also make the VMDK file expand to its full size.

NOTE: It is very important to make sure in advance that there is enough space in the current datastore for this.
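The space check can be sketched as simple arithmetic: since zeroing makes the thin disk grow towards its provisioned size, the datastore needs at least the difference between the provisioned size and the current file size. The function names and the 1 GB safety margin are assumptions made for this illustration.

```python
def growth_during_zeroing_gb(provisioned_gb: float, current_vmdk_gb: float) -> float:
    """Worst-case growth of the thin VMDK while free space is being zeroed."""
    return max(0.0, provisioned_gb - current_vmdk_gb)


def enough_room_to_zero(provisioned_gb: float, current_vmdk_gb: float,
                        datastore_free_gb: float, margin_gb: float = 1.0) -> bool:
    """True if the datastore can absorb the growth plus an assumed safety margin."""
    needed = growth_during_zeroing_gb(provisioned_gb, current_vmdk_gb) + margin_gb
    return datastore_free_gb >= needed


# Example disk: 8 GB provisioned, currently 4.6 GB on the datastore.
print(f"{growth_during_zeroing_gb(8.0, 4.6):.1f} GB of growth expected")
print(enough_room_to_zero(8.0, 4.6, datastore_free_gb=10.0))
print(enough_room_to_zero(8.0, 4.6, datastore_free_gb=3.0))
```

Other thin disks on the same datastore may grow at the same time, so a larger margin is often sensible.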

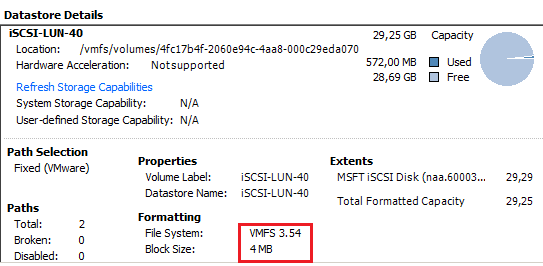

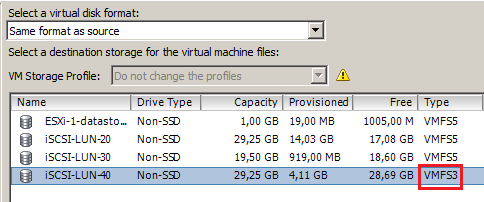

The next step is to locate a VMFS datastore with a different block size than the current datastore. This seems strange, but it is crucial: the reclaim will not work if the block sizes are equal. With VMFS 5 the option of selecting the block size is no longer available, and all new VMFS 5 datastores are created with a block size of 1 MB. This is good and removes other issues, but means that you need one VMFS 3 datastore during the reclaim phase.

If you only use VMFS 5 you might have to create a new LUN on the Fibre Channel or iSCSI SAN and format it with VMFS 3. Make sure to select a different block size than that of the current datastore. The LUN is only used temporarily and only needs to be large enough to hold the VM during the reclaim process.

(If creating a new VMFS 3 datastore you should typically select the 8 MB block size, which allows the largest VMDK files.)

Select the datastore and view the Datastore Details to verify the block size. In this case it is 4 MB, which will work for the transfer from the 1 MB block size of the current datastore.
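The choice of block size for the temporary datastore can be sketched as follows. The table holds the approximate VMFS 3 maximum file size per block size, and the helper (a hypothetical name) lists the block sizes that both differ from the source datastore and can hold the VMDK. The comparison is strict because the real limits are slightly below the round figures.

```python
# Approximate VMFS 3 limits: block size in MB -> largest file size in GB.
VMFS3_MAX_FILE_GB = {1: 256, 2: 512, 4: 1024, 8: 2048}


def usable_vmfs3_block_sizes(source_block_mb: int, vmdk_provisioned_gb: float) -> list:
    """Block sizes (MB) that differ from the source and can hold the VMDK."""
    return [
        block_mb
        for block_mb, max_gb in sorted(VMFS3_MAX_FILE_GB.items())
        if block_mb != source_block_mb and vmdk_provisioned_gb < max_gb
    ]


print(usable_vmfs3_block_sizes(1, 8))     # [2, 4, 8]
print(usable_vmfs3_block_sizes(1, 8192))  # []
```

For the 8 GB disk in this example any block size other than 1 MB works. An empty list means the disk is too large for a VMFS 3 datastore.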

Then use Storage vMotion to transfer the VM disk to the VMFS 3 datastore. Depending on the size of the VMDK, the performance of the SAN and the transport (FC or iSCSI), this will naturally take some time.

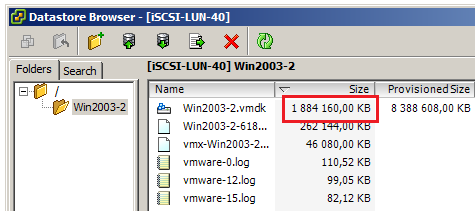

After the Storage vMotion is complete, use the Datastore Browser on the new datastore to see how much space was reclaimed. In this case the VMDK file size now matches almost exactly the amount of data consumed by the Windows guest, and all “dead space” has been returned to the datastore.

The final step is to transfer the VM back to its original location. Since these actions (zeroing with the sdelete tool, followed by two Storage vMotions) are very disk intensive, it is best to perform them during off-peak hours.

Hello Rickard,

Useful technique.

Assuming I can find a way to do step 1 on a particular VM, do you know if this method works with any type of VM regardless of the guest OS?

Regards

Hi Rodrigo,

and thank you for your comment. This will work with any guest operating system as long as you can make sure the unused parts of the internal file system are zeroed. In Linux you should be able to use tools like dd or similar.
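As an illustration of the idea for guests without sdelete, here is a minimal Python sketch that fills free space with a temporary file of zeros and then deletes it. It is not the dd approach itself but the same principle. The function name and its parameters are assumptions for this example, and it would need to be run inside the guest against a directory on the file system to be zeroed.

```python
import errno
import os
import tempfile


def zero_fill_free_space(directory, max_bytes=None, chunk_size=1024 * 1024):
    """Write zeros to a temporary file in `directory`, then delete the file.

    With max_bytes=None the file grows until the file system is full.
    Returns the number of zero bytes written.
    """
    chunk = bytes(chunk_size)  # a buffer of zero bytes
    written = 0
    fd, path = tempfile.mkstemp(prefix="zerofill-", dir=directory)
    try:
        while max_bytes is None or written < max_bytes:
            size = chunk_size if max_bytes is None else min(chunk_size, max_bytes - written)
            try:
                count = os.write(fd, chunk[:size])
            except OSError as exc:
                if exc.errno == errno.ENOSPC:
                    break  # file system is full: the free space has been zeroed
                raise
            if count == 0:
                break
            written += count
        os.fsync(fd)  # push the zeros down to the virtual disk
    finally:
        os.close(fd)
        os.remove(path)  # release the space again inside the guest
    return written


if __name__ == "__main__":
    # Harmless demo: write only 4 MiB into a scratch directory.
    with tempfile.TemporaryDirectory() as scratch:
        print(zero_fill_free_space(scratch, max_bytes=4 * 1024 * 1024))
```

Filling a live file system completely can disturb running services, so the same off-peak advice applies as for sdelete.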

We are in 2015 and your tutorial is still the best:

– works properly with vSphere 5.1

– works without having to stop the guest OS.

Thank you Rick.

Glad to hear that Olaf!

It would be nice, however, to have this functionality built in… 🙂

Hi,

All looks good for what we need to do this for but we are dealing with a VMDK which is 8TB in size, so VMFS3 will not work as it doesn’t support files of this size.

Do we have any other options using your method?

Thanks

Darren

Hello Darren,

interesting question! The key is that the other datastore should have a different block size and this is not available with VMFS5.

One possible way:

Create a LUN at some size below 2 TB.

Create a VMFS3 datastore on this LUN with some other block size than 1 MB.

Upgrade the datastore to VMFS5. This will keep the block size.

Now expand the LUN from the array up to 8 TB and then expand the VMFS datastore.

Test. 🙂

Regards, Rickard

Dear Rickard,

Thanks for your article, which is relevant to what I need at work.

My company environment: vSphere vCenter 5.1. Currently, we want to shrink a datastore for a VM on it. Is there another method to reclaim this unused datastore space? Storage vMotion to a different block size is inconvenient in our environment. Ideally there should be no outage for the VM. Thanks.

Hello Hellen,

What exactly do you want to do? Shrink a datastore, that is, the whole VMFS datastore? Or reduce the size of a virtual machine's thin VMDK file?

Best regards, Rickard

Hello Rickard,

Thanks for your kind reply.

I want to shrink a VM to free unused space on the datastore. I think temporarily building a datastore of a different type (VMFS3) is inconvenient, since our production datastores are VMFS5, and Storage vMotion would take a long time for this VM (current size is 600 GB). Another method is to shrink from the command line with “vmkfstools”, but that requires shutting down the server. Is there a third method that fits our environment? Or which of the two methods above would you suggest as the better fit for us? Thanks.

Hello Hellen,

for what reason is setting up an alternative VMFS3 datastore inconvenient for you? Is there a lack of free space or other issues?

If you do have enough free space on SOME storage to hold the VM, then you could create the datastore as described above, move the VM there with Storage vMotion and then migrate it back. This method causes no downtime for the VM at all. Moving a 600 GB virtual machine will typically not take that much time, and if you do the migrations during off-peak hours the impact is typically low.

Regards, Rickard
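To put a rough number on the migration time discussed in this reply, the sketch below divides the VM size by a sustained throughput. The 200 MB/s figure is purely an assumption for illustration; real Storage vMotion rates depend on the array, the transport and the load.

```python
def transfer_minutes(size_gb: float, throughput_mb_per_s: float) -> float:
    """Minutes needed to copy size_gb at a sustained throughput in MB/s."""
    if throughput_mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    return size_gb * 1024 / throughput_mb_per_s / 60


# 600 GB VM at an assumed 200 MB/s, there and back again.
one_way = transfer_minutes(600, 200)
print(f"one way: about {one_way:.0f} min, round trip: about {2 * one_way:.0f} min")
```

Under that assumption each move takes a little under an hour.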

Dear All,

How can I shrink or resize the VM datastore?

The current datastore size is 5 TB and I want to reduce it to 3 TB. I need your help.

Hello,

unfortunately that is not possible. You must move all your virtual machines to another datastore, then erase and recreate the LUN with a smaller size.

Regards, Rickard

Can I also achieve this by using NFS storage?

NFS didn’t work with the haneWIN NFS server I set up.

I also see VMFS3 is no longer supported in vSphere 6. – https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2093576

Now what can I do??

Hi Rickard,

I have a question regarding this article about thin-provisioned VMDK files in VMware. I understand the whole point that a thin disk does not “release” the space that has been deleted within the guest OS, but what would happen if the thin-provisioned VMDK file reaches its capacity and the VMware datastore that holds this VMDK file is overprovisioned?

Will the data in the VMDK file be overwritten once it reaches its maximum size?

As data within the guest OS is written and deleted over time, obviously this data within the vmdk does not shrink, but at what point is it overwritten?

I have a query about thick-to-thin migration.

I have an Exchange server with 20 disks, all thick provisioned (eager zeroed and lazy zeroed), and the total size of the Exchange VM is 16 TB.

I have created one datastore of 10 TB. Is it possible to migrate the Exchange VM to thin disks on the 10 TB datastore, or do I need a datastore of the same size as the Exchange VM (16 TB) to migrate to thin?