How to enable and verify Ethernet Flow Control for VMware ESXi with iSCSI / NFS. Flow Control can help physical switches avoid dropping frames during periods of very heavy network congestion. It is typically used in IP storage networks. The Ethernet standard 802.3x defines the usage of Flow Control and the Pause Frame fields.

Storage vendors often recommend enabling Flow Control on the Ethernet networks used for IP based storage (iSCSI and NFS). In this blog post we shall see how to enable Flow Control on ESXi and the physical switches, and also how to verify on both sides that Flow Control has been successfully negotiated.

Verify with your specific SAN / NFS vendor what their recommendations are for Flow Control for the storage network with ESXi.

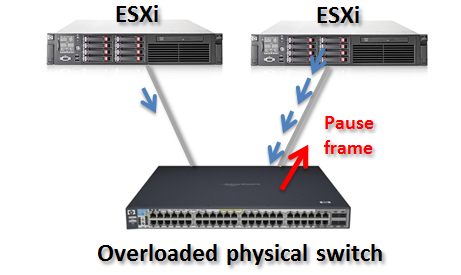

Flow Control was defined in the 802.3x standard as early as 1997, but is not commonly used. However, it can be useful in certain network types, such as those dedicated to IP based storage. The idea with Ethernet Flow Control is that if a networking device, e.g. a switch port, is overwhelmed with incoming data and its output queues are full, it can send a PAUSE request to its neighbor asking it to hold off any outstanding frames for a short period. That period is expressed in pause quanta of 512 bit times each, so its length in real time depends on the link speed.

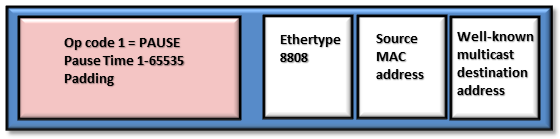
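As a rough illustration of how small a PAUSE frame is, the Python sketch below assembles one from its fields: the reserved destination address 01-80-C2-00-00-01, the MAC Control EtherType 0x8808, the PAUSE opcode 0x0001 and a 16-bit pause time, padded to the minimum Ethernet frame size (the FCS is left to the NIC). The function name and the source MAC address are made up for this example; in practice the NIC or switch hardware generates these frames, not software on the host.

```python
import struct

# Field values for an 802.3x PAUSE frame.
PAUSE_DEST_MAC = bytes.fromhex("0180c2000001")  # reserved multicast address
MAC_CONTROL_ETHERTYPE = 0x8808
PAUSE_OPCODE = 0x0001
MIN_FRAME_WITHOUT_FCS = 60  # 64-byte minimum frame minus the 4-byte FCS


def build_pause_frame(src_mac: bytes, quanta: int) -> bytes:
    """Build a PAUSE frame (hypothetical helper, for illustration only)."""
    if len(src_mac) != 6:
        raise ValueError("source MAC must be 6 bytes")
    if not 0 <= quanta <= 0xFFFF:
        raise ValueError("pause time is a 16-bit value")
    header = PAUSE_DEST_MAC + src_mac + struct.pack("!H", MAC_CONTROL_ETHERTYPE)
    payload = struct.pack("!HH", PAUSE_OPCODE, quanta)
    # Pad with zeros up to the minimum frame size.
    return (header + payload).ljust(MIN_FRAME_WITHOUT_FCS, b"\x00")


# Example source MAC (made up); a pause time of 0 means "resume sending".
frame = build_pause_frame(bytes.fromhex("005056000001"), 0xFFFF)
print(len(frame), frame[:18].hex())
```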

The PAUSE period on 1 Gbit/s ports can be at most about 34 milliseconds (65535 pause quanta of 512 bit times each, roughly 33.6 ms). The actual PAUSE time is selected by the sender. The destination address is a reserved multicast address (01-80-C2-00-00-01) which an 802.1D compatible switch is not allowed to forward to any other device. That is, the PAUSE frame is only link local between a node and the switch port.
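The maximum pause period follows from the frame format: the pause time is a 16-bit count of quanta, and one quantum is the time needed to transmit 512 bits at the link speed. A minimal sketch of the arithmetic, with a helper name chosen only for this example:

```python
PAUSE_QUANTUM_BITS = 512   # one pause quantum = 512 bit times
MAX_PAUSE_QUANTA = 0xFFFF  # the pause time field is 16 bits wide


def pause_seconds(quanta: int, link_bps: float) -> float:
    """Convert a pause time in quanta to seconds for a given link speed."""
    if not 0 <= quanta <= MAX_PAUSE_QUANTA:
        raise ValueError("pause time must fit in 16 bits")
    return quanta * PAUSE_QUANTUM_BITS / link_bps


for name, bps in (("1 Gbit/s", 1e9), ("10 Gbit/s", 1e10)):
    ms = pause_seconds(MAX_PAUSE_QUANTA, bps) * 1000
    print(f"{name}: maximum pause {ms:.2f} ms")
```

On a 10 Gbit/s link the same maximum field value corresponds to a pause that is ten times shorter, since each bit time is ten times shorter.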

Note that we typically expect Layer 4 protocols such as TCP to handle general flow control, together with data checksums, acknowledgements, segment reassembly and more. TCP works very well, but it was originally designed to handle sessions with very high latency. In a low latency network such as an IP storage (iSCSI / NFS) network, the TCP retransmission handling in case of lost frames can be less effective.

802.3x Flow Control works directly at Layer 2 and should be able to kick in earlier than TCP: a switch port that predicts its queues will overflow, and that it will soon be forced to drop frames, can ask the sender to pause transmission for a short while to avoid frame drops and TCP retransmissions.
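The effect can be illustrated with a deliberately simplified toy model. This is not how any particular switch implements its buffers, and all numbers are invented for the example. A sender offers more frames per tick than the port can drain. Without flow control the queue fills up and frames are dropped. With a pause threshold, the port asks the sender to hold off before the queue overflows.

```python
def simulate(ticks, arrival, drain, capacity,
             pause_threshold=None, pause_ticks=0):
    """Return the number of frames dropped by a toy switch-port queue.

    arrival/drain are frames per tick; capacity is the queue size.
    If pause_threshold is set, the port pauses the sender for
    pause_ticks ticks whenever the queue reaches that level.
    """
    queue = 0
    dropped = 0
    paused_until = 0  # first tick at which the sender may transmit again
    for tick in range(ticks):
        if tick >= paused_until:
            # Sender transmits; frames that do not fit are dropped.
            accepted = min(arrival, capacity - queue)
            dropped += arrival - accepted
            queue += accepted
        # The port forwards as many queued frames as it can this tick.
        queue -= min(queue, drain)
        if (pause_threshold is not None and tick >= paused_until
                and queue >= pause_threshold):
            paused_until = tick + 1 + pause_ticks
    return dropped


print("drops without flow control:", simulate(100, 10, 6, 40))
print("drops with flow control:   ",
      simulate(100, 10, 6, 40, pause_threshold=20, pause_ticks=2))
```

In this model the paused sender simply holds its frames back, which is the point of the mechanism: the data is delayed at the source instead of being dropped in the switch and retransmitted by TCP.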

To be able to use Flow Control both sides must support it, in this case the ESXi host physical network adapter and the switch ports. Flow Control could be set in a static mode, but could also use negotiation with the remote side to verify that Flow Control indeed is supported. This means the physical switch ports and ESXi must be configured to use 802.3x Flow Control negotiation.

In ESXi the default setting on the vmnics (physical network ports) is to use Flow Control negotiation.

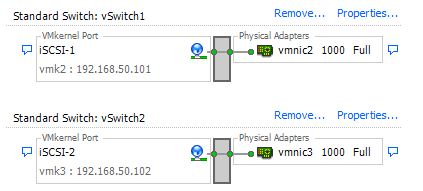

Check which vmnic(s) connect to the vSwitch(es) used for IP storage. In this example we shall study how to enable Flow Control for vmnic2 and vmnic3.

You could then verify the settings on ESXi by using the command line interface (ESXi Shell or SSH) and the command:

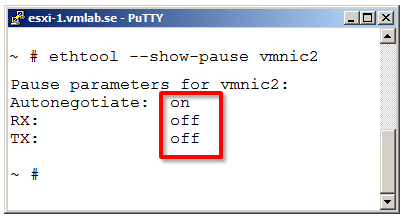

ethtool --show-pause vmnic2

Above we note that autonegotiation is enabled, but that no Flow Control enabled switch port has been found, so both receiving (RX) and transmitting (TX) pause frames are off. Note the double dash before the show-pause parameter.

“Off” on RX and TX does not indicate that Flow Control is disabled, only that it has not been successfully negotiated with the remote physical switch port.
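If you want to check many hosts or vmnics, the same interpretation can be scripted. The sketch below parses text in the layout ethtool normally prints for pause parameters and applies the reading described above. The sample text is illustrative rather than captured from a real host, the function names are made up, and the exact output may differ between ESXi and driver versions.

```python
def parse_pause_output(text: str) -> dict:
    """Extract the Autonegotiate/RX/TX flags from ethtool pause output."""
    state = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() in ("Autonegotiate", "RX", "TX"):
            state[key.strip()] = value.strip() == "on"
    return state


def describe(state: dict) -> str:
    """Interpret the flags the way the article does."""
    rx, tx = state.get("RX", False), state.get("TX", False)
    if rx and tx:
        return "flow control negotiated in both directions"
    if rx or tx:
        return "flow control active in one direction only"
    if state.get("Autonegotiate", False):
        return "negotiation enabled, but no flow control partner found"
    return "flow control disabled"


# Illustrative sample only; not taken from a real host.
sample = """Pause parameters for vmnic2:
Autonegotiate:  on
RX:             off
TX:             off
"""
print(describe(parse_pause_output(sample)))
```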

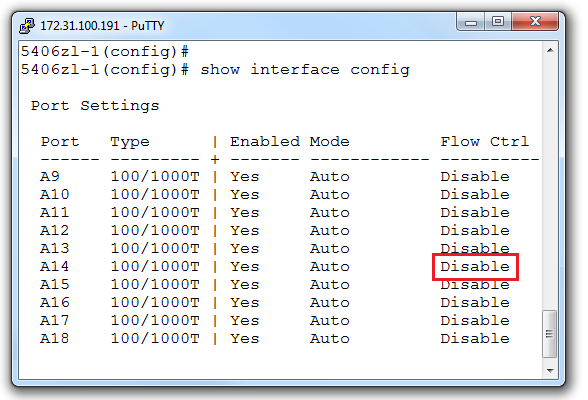

We will now check and enable Flow Control on the physical switch. In this case the model is HP Procurve 5406-zl. The switch port A14 is connected to the ESXi host. With the command “show interface config” we can see the current configuration state of Flow Control. Above we notice that the config mode for the port to ESXi (A14) is “Disable”, which means that Flow Control is logically unused. No negotiation with the remote side is initiated and incoming Flow Control frames are ignored.

This is the default setting on HP Procurve network switches. Check with your specific switch type what the default value is and how to change / verify. The commands shown in this post work on all Procurve (E-series) devices with Flow Control support.

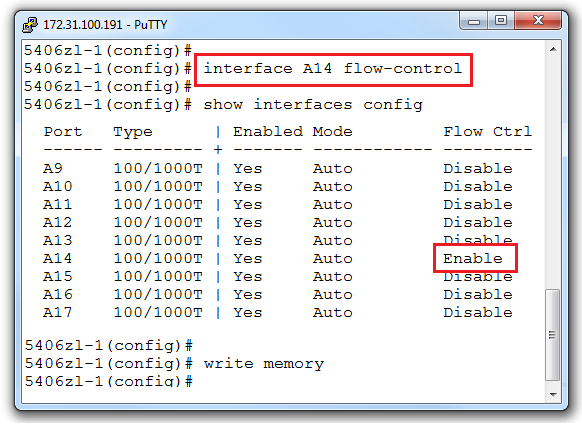

To activate Flow Control per port use the command: “interface A14 flow-control”. This instructs the switch port to try to find a Flow Control partner on the remote side through 802.3x negotiation.

In the output above “Enable” just means that it should try to negotiate Flow Control with the host, but does not tell if it actually has found any 802.3x partner or not.

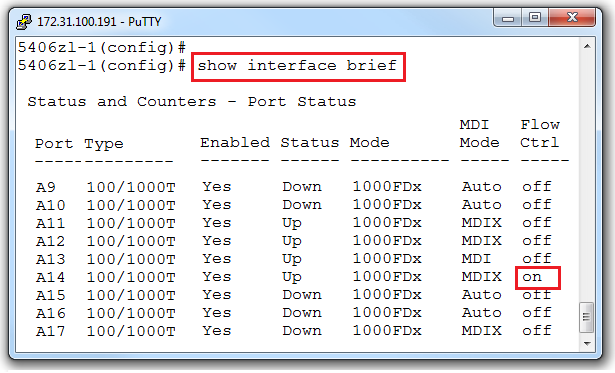

To know if this was successful we use a different command: “show interface brief”. If the Flow Ctrl output for the specific port (here A14) shows “on”, this means that it went well and the switch port has actually negotiated with the remote side, in this case the ESXi host.

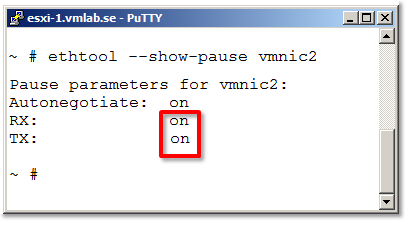

Back on the ESXi host we can also verify the ESXi host Flow Control state.

With the command “ethtool --show-pause vmnic2” we can finally see that both RX and TX are now on. If necessary the switch can now ask the ESXi host to pause for a brief moment if, for example, the uplink into the iSCSI target / NFS server is overwhelmed.

Flow Control should be enabled if the storage vendor for the iSCSI or NFS solution recommends it, and it can be helpful for dealing with short periods of bandwidth overload. Flow Control auto-negotiation is enabled by default on ESXi, and depending on the switch vendor it must be configured properly on the ports attached to the ESXi hosts' IP storage vmnics.

Hello, nice article – I am dealing with high “drops tx” on the iSCSI ESXi NICs (and temporary datastore disconnects). I think that disabled flow control on the switch could be an issue. Do I also need to enable flow control on the iSCSI storage ports?

Hello Tomas,

if your ESXi host has a default installation you should not have to change the Flow Control settings on the host itself. Just make sure the physical switch is set to auto-negotiate Flow Control.

You could use ethtool before and after to verify that it worked.

There are many possible causes for your iSCSI problems, but it is worth enabling this to see if anything changes.

Sorry, maybe my question was bad. I’ve already enabled flow control on ports from switch to host. But i am wondering if i should also enable flow control on ports from switch to iscsi storage? If i take look on switch statistics there is big number of “drops tx” on ports from switch to esxi host, but there are few also on ports from switch to storage… Thank you

If your iSCSI SAN vendor recommends it and the SAN nics actually could send/accept PAUSE frames then it would be good.

If many ESXi hosts are simultaneously sending lots of data to the SAN, one bottleneck could be the switch ports leading into the SAN. Flow Control from the SAN side could help the iSCSI target handle incoming data better.

Hello again! After 14 days i can say – yes!!! Flow-control helps me with my problems with Dell MD3000i. Thank you!

Nice to hear that your problems were solved, Tomas, and thanks for reporting back.

Regards / Rickard

Great article. I’ve searched for info regarding ESXi and pause frames, and every blog/article refers to the switch sending pause frames, but what would cause an ESXi host to send pause frames and how do you troubleshoot that issue?

Hello Jeff,

and thank you for your comment.

The ESXi host could send PAUSE frames if it believes that the number of incoming frames is too great to handle. This should however be unusual.

Do you see on your physical switches that the hosts do send flow control frames and pause the incoming frames?

Regards, Rickard

Yes, the ESXi host is connected to my Nexus 7k at 10G. I had flowcontrol turned off by default and noticed that I was receiving rx pause frames coming from one of the four 10G NICs on the one host. I enabled flowcontrol on the Nexus, and the switch and host negotiated flowcontrol, which improved performance. I still see a lot of pause frames coming from the host.

It could be that the NIC is having some issues, like: http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2044681

1. Could a slight but constant increase of TX errors observed on a switch port be an indicator of some flowcontrol incompatibility between the switch and the ESXi host?

2. If I don’t see any traces of flowcontrol by running ‘esxcli system module parameters list --module xxx’ does it mean the NIC doesn’t support it (mine are igb and ixgbe)?

Thank you.