In vSphere 5 we have support for using multiple VMkernel ports enabled for vMotion. Having more than one physical network card for vMotion allows us to use them simultaneously, even when transferring only a single virtual machine. With enough spare NICs we could dedicate up to sixteen 1 Gbit NICs or four 10 Gbit NICs to vMotion alone. Having 16 extra network ports might not be too common, but even adding just one more vmnic for vMotion traffic can increase performance considerably.
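To make the limits above concrete, here is a minimal Python sketch that checks a planned vMotion NIC layout and returns its theoretical aggregate bandwidth. It assumes the two limits are separate configurations (all 1 Gbit or all 10 Gbit), and the function and table names are hypothetical, not part of any VMware tool.

```python
# Multi-NIC vMotion limits as stated in the text: link speed (Gbit/s) -> max vmnics.
VMOTION_NIC_LIMITS = {1: 16, 10: 4}


def check_vmotion_nics(speed_gbit: int, nic_count: int) -> int:
    """Return the theoretical aggregate vMotion bandwidth in Gbit/s.

    Hypothetical helper: raises ValueError if the link speed has no recorded
    limit or if nic_count falls outside 1..limit for that speed.
    """
    if speed_gbit not in VMOTION_NIC_LIMITS:
        raise ValueError(f"no limit recorded for {speed_gbit} Gbit/s NICs")
    limit = VMOTION_NIC_LIMITS[speed_gbit]
    if not 1 <= nic_count <= limit:
        raise ValueError(f"{nic_count} x {speed_gbit} Gbit/s is outside 1..{limit}")
    # Ideal line rate only; real throughput is lower.
    return speed_gbit * nic_count


print(check_vmotion_nics(1, 2))   # 2
print(check_vmotion_nics(10, 4))  # 40
```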

There is, however, some undocumented configuration that must be done to make this work.

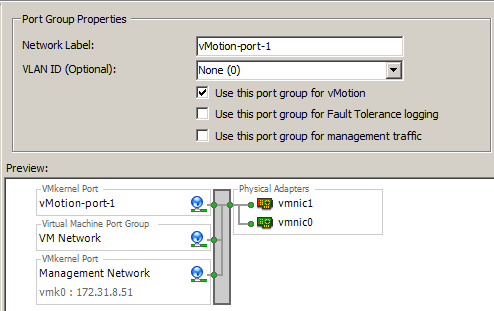

The first step is to add two or more physical NIC ports (vmnics) to the vSwitch, then create the same number of VMkernel ports on each host and give them suitable IP addresses, all on the same subnet/VLAN. Make sure to select the vMotion option on each VMkernel port. (Enabling vMotion on more than one VMkernel port was not possible in 4.1.)
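The addressing rule (one VMkernel port per vmnic, every address on the same vMotion subnet) can be sketched as a small planning helper using Python's standard `ipaddress` module. The host names, the `vmk1`/`vmk2` port names, the subnet `10.0.50.0/24` and the starting offset are all hypothetical examples; substitute your own.

```python
import ipaddress


def plan_vmotion_ips(hosts, ports_per_host, subnet, start=10):
    """Assign one address per vMotion VMkernel port, all inside one subnet.

    hosts: host names (hypothetical).
    ports_per_host: number of vMotion VMkernel ports, one per vmnic.
    subnet: the shared vMotion subnet/VLAN, e.g. "10.0.50.0/24".
    start: offset of the first address used inside the subnet.
    """
    network = ipaddress.ip_network(subnet)
    plan = {}
    offset = start
    for host in hosts:
        ports = []
        for port in range(ports_per_host):
            ip = network.network_address + offset
            # Refuse addresses outside the subnet or the network/broadcast address.
            if ip not in network or ip in (network.network_address, network.broadcast_address):
                raise ValueError(f"subnet {subnet} has no usable address for {host}")
            # Port names are illustrative; vmk0 is assumed to be management.
            ports.append((f"vmk{port + 1}", str(ip)))
            offset += 1
        plan[host] = ports
    return plan


plan = plan_vmotion_ips(["esx01", "esx02"], 2, "10.0.50.0/24")
for host, ports in plan.items():
    print(host, ports)
# esx01 [('vmk1', '10.0.50.10'), ('vmk2', '10.0.50.11')]
# esx02 [('vmk1', '10.0.50.12'), ('vmk2', '10.0.50.13')]
```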

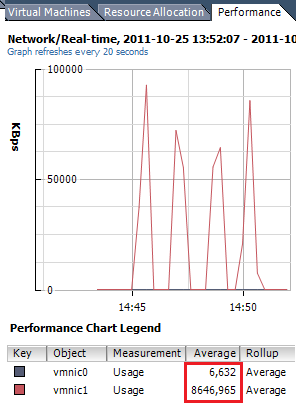

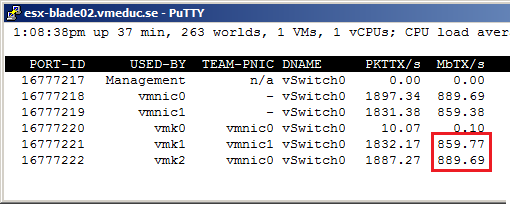

This might seem like enough configuration, and vMotion will indeed work, but unfortunately only one of the vmnics is used while the rest serve only for failover. As seen above, when we perform a vMotion and study the traffic over the network cards, we notice that only one of them is actually carrying traffic.

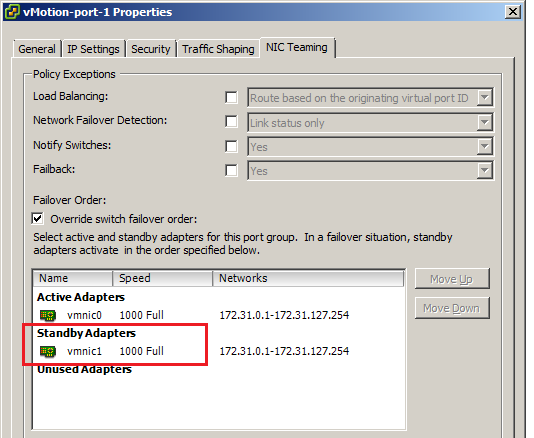

We must now make a configuration change that, for some reason, is missing from the official manuals and courseware. It is somewhat similar to how multipath support for iSCSI is enabled in vSphere 4.1: we have to “bind” the VMkernel ports so that each port has a dedicated path to one physical network card (vmnic).

Open the properties for the vSwitch, select the first vMotion-enabled VMkernel port and go to NIC Teaming. Click “Override switch failover order” and then move the second vmnic down to standby.

Do the same for the second vMotion port, but this time keep the second vmnic active and move the first vmnic down to standby.
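The two steps above follow one pattern: VMkernel port *i* gets vmnic *i* as its only active uplink, and every other vmnic becomes standby so the port can still fail over. A minimal Python sketch of that mapping, generalized to N ports under the assumption that the same pattern extends beyond two, might look like this (port and vmnic names are illustrative):

```python
def vmotion_failover_order(vmk_ports, vmnics):
    """Build the per-port "Override switch failover order" settings.

    Each vMotion VMkernel port gets exactly one active vmnic (its own);
    all remaining vmnics are listed as standby for failover.
    """
    if len(vmk_ports) != len(vmnics):
        raise ValueError("need exactly one vmnic per vMotion VMkernel port")
    order = {}
    for port, active in zip(vmk_ports, vmnics):
        standby = [nic for nic in vmnics if nic != active]
        order[port] = {"active": [active], "standby": standby}
    return order


for port, cfg in vmotion_failover_order(["vmk1", "vmk2"], ["vmnic0", "vmnic1"]).items():
    print(port, cfg)
# vmk1 {'active': ['vmnic0'], 'standby': ['vmnic1']}
# vmk2 {'active': ['vmnic1'], 'standby': ['vmnic0']}
```

With two ports this reproduces the manual steps exactly: the first port keeps vmnic0 active, the second keeps vmnic1 active.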

This has to be done on every host. Now, when a single virtual machine is migrated with vMotion, all vmnics are used and the VM is transferred much faster over the vMotion network.
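Because the binding must exist on every host, a quick consistency check helps spot a host that was missed. The sketch below assumes a hypothetical per-host dictionary of teaming settings (not an actual vSphere export format) and reports ports without exactly one active vmnic, or vmnics that are active on more than one port.

```python
def check_host(teaming):
    """Return a list of problems in one host's vMotion port teaming.

    teaming: {port: {"active": [...], "standby": [...]}} (hypothetical shape).
    An empty list means every port is bound to its own single active vmnic.
    """
    problems = []
    seen = {}  # vmnic -> port that already uses it as active
    for port, cfg in sorted(teaming.items()):
        if len(cfg["active"]) != 1:
            problems.append(f"{port}: expected 1 active vmnic, found {len(cfg['active'])}")
            continue
        nic = cfg["active"][0]
        if nic in seen:
            problems.append(f"{port}: {nic} is already active on {seen[nic]}")
        else:
            seen[nic] = port
    return problems


hosts = {
    "esx01": {"vmk1": {"active": ["vmnic0"], "standby": ["vmnic1"]},
              "vmk2": {"active": ["vmnic1"], "standby": ["vmnic0"]}},
    # Override not configured: both vmnics are still active on both ports.
    "esx02": {"vmk1": {"active": ["vmnic0", "vmnic1"], "standby": []},
              "vmk2": {"active": ["vmnic0", "vmnic1"], "standby": []}},
}
for host, teaming in hosts.items():
    print(host, check_host(teaming) or "OK")
```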

Above is a view from the command-line performance tool esxtop, where we can see that both vmnic0 and vmnic1 are now almost fully utilized.
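To get a feel for what near-full utilization of two links means, the arithmetic can be written as a rough estimate of a single memory copy. This is an idealized sketch: it assumes traffic is spread evenly over the links at line rate (`efficiency=1.0` by default) and ignores pages the guest dirties during the copy, so real vMotion times will be longer.

```python
def estimate_transfer_seconds(memory_gib, link_gbit, nic_count, efficiency=1.0):
    """Idealized time to copy a VM's memory once over nic_count vMotion links.

    efficiency: fraction of line rate actually achieved (assumption; 1.0 = ideal).
    """
    bits = memory_gib * 1024**3 * 8
    rate_bits_per_s = link_gbit * 1e9 * nic_count * efficiency
    return bits / rate_bits_per_s


one = estimate_transfer_seconds(16, 1, 1)  # 16 GiB VM, one 1 Gbit vmnic
two = estimate_transfer_seconds(16, 1, 2)  # same VM, two 1 Gbit vmnics
print(f"1 NIC: {one:.1f} s, 2 NICs: {two:.1f} s")
```

Doubling the number of fully used vmnics halves this idealized copy time, which is the effect the esxtop view suggests.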