How to calculate the usable bandwidth on a Gigabit Ethernet network.

In this article we will look at how much actual data throughput a Gigabit Ethernet network offers, and whether it increases when Jumbo Frames are used.

How much of this is user data and how much is overhead?

The bandwidth of a Gigabit Ethernet network is defined as a node being able to send 1 000 000 000 bits each second, that is, one billion 1s or 0s every second. Bits are usually grouped into bytes, and since eight bits make up one byte this makes it possible to transfer 125 000 000 bytes per second (1 000 000 000 / 8).

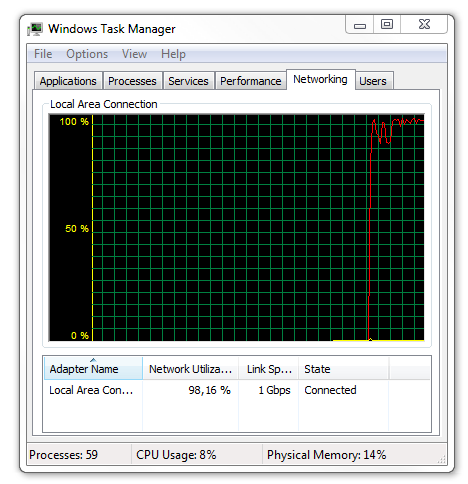

Unfortunately not all of these 125000000 bytes/second can be used to send data, as we have multiple layers of overhead. As you may be aware, data transferred over an Ethernet based network must be divided into “frames”. The frame size sets the maximum number of bytes that can be sent together. The maximum frame size for Ethernet has been 1518 bytes for the last 25 years or more.

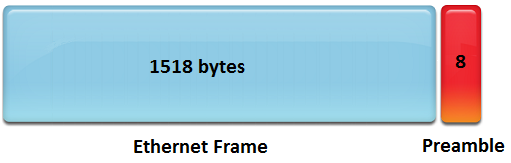

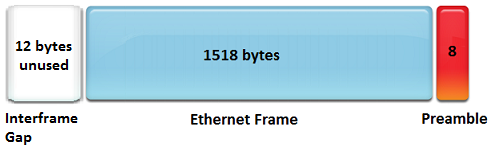

Each frame causes some overhead, both inside the frame and, less well known, on the “outside”. Before each frame is sent, a certain combination of bits must be transmitted, called the Preamble, which basically signals to the receiver that a frame is coming right behind it. The preamble is 8 bytes and is sent just before each and every frame.

When the main body of the frame (1518 bytes) has been transferred we might want to send another one. Since we are not using the old CSMA/CD access method (used only for half duplex) we do not have to “sense the cable” to see if it is free, which would cost time. However, the Ethernet standard still requires a certain number of idle bytes before the next frame is sent onto the wire, even for full duplex transmission.

This is called the Interframe Gap and is 12 bytes long. So between all frames we have to leave at least 12 bytes “empty” to give the receiver side the time needed to prepare for the next incoming frame.

This will mean that each frame actually uses:

12 empty bytes of Interframe Gap + 1518 bytes of frame data + 8 bytes of preamble = 1538

So each frame actually consumes 1538 bytes of bandwidth, and if we remember that we had “time slots” for sending 125000000 bytes each second, this leaves room for 81274 frames per second (125000000 / 1538).
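The arithmetic above can be checked with a few lines of Python. This is only a sketch of the calculation in the text; the variable names are chosen here for illustration.

```python
# Wire footprint and frame rate for full-size frames on Gigabit Ethernet.
LINK_BYTES_PER_SECOND = 1_000_000_000 // 8   # 125 000 000 bytes/s

INTERFRAME_GAP = 12   # idle bytes between frames
PREAMBLE = 8          # bytes sent before each frame
MAX_FRAME = 1518      # Ethernet header + payload + checksum

# Bytes of bandwidth consumed by one full-size frame.
wire_bytes_per_frame = INTERFRAME_GAP + PREAMBLE + MAX_FRAME

# Whole frames that fit into one second of link time.
frames_per_second = LINK_BYTES_PER_SECOND // wire_bytes_per_frame

print(wire_bytes_per_frame)   # 1538
print(frames_per_second)      # 81274
```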

So on default Gigabit Ethernet we can transmit over 81000 full size frames each second, a quite impressive number. Since we are running full duplex we could at the same time receive 81000 frames too!

Let us continue to study the overhead. For each frame we lose 12 + 8 bytes to the Interframe Gap and the Preamble, which could be considered to be “outside” of the frame, but can we use the rest to send our actual data? No, there is some more overhead inside the frame.

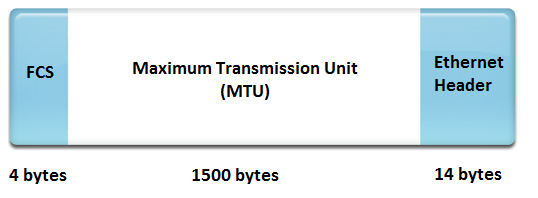

The first 14 bytes of the frame are used for the Ethernet header and the last 4 bytes contain a checksum used to detect transfer errors. This uses the CRC32 checksum algorithm and is called the Frame Check Sequence (FCS).

This means that we lose a total of 18 bytes in overhead for the Ethernet header at the beginning and the checksum at the end. (The blue parts above could be seen as something like a “frame” around the data carried inside.) The number of bytes left is called the Maximum Transmission Unit (MTU) and is 1500 bytes on default Ethernet. The MTU is the payload that can be carried inside an Ethernet frame, see the picture above. It is a common misunderstanding that the MTU is the frame size, but it is really only the data inside the frame.

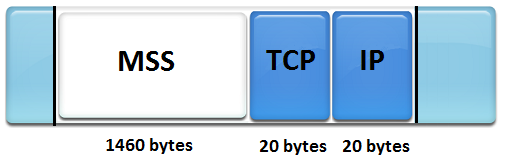

Just behind the Ethernet header we will most likely find the IP header. With ordinary IPv4 this header is 20 bytes long. Behind the IP header we will also most likely find the TCP header, which has the same length of 20 bytes. The amount of data that can be transferred in each TCP segment is called the Maximum Segment Size (MSS) and is typically 1460 bytes.

So the Ethernet header and checksum plus the IP and TCP headers together add 58 bytes of overhead. Adding the Interframe Gap and the Preamble gives 20 more. So for each 1460 bytes of data sent we have a minimum of 78 extra bytes handling the transfer at different layers. All of these are very important, but they do cause overhead at the same time.
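As a quick sanity check, the per-frame overhead can be added up in code. This sketch assumes IPv4 and TCP headers without any options (options would make the headers larger and the MSS smaller); the dictionary and its key names are made up for this example.

```python
# Per-frame overhead in bytes, as listed in the text.
# Assumption: no IP or TCP options are in use.
OVERHEAD = {
    "interframe gap": 12,
    "preamble": 8,
    "ethernet header": 14,
    "frame check sequence": 4,
    "ipv4 header": 20,
    "tcp header": 20,
}

MTU = 1500  # payload carried inside a default Ethernet frame

# The MSS is what remains of the MTU after the IP and TCP headers.
mss = MTU - OVERHEAD["ipv4 header"] - OVERHEAD["tcp header"]

total_overhead = sum(OVERHEAD.values())
wire_bytes_per_frame = mss + total_overhead

print(mss)                   # 1460
print(total_overhead)        # 78
print(wire_bytes_per_frame)  # 1538
```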

As noted at the beginning of this article, we can send 125000000 bytes/second on Gigabit Ethernet. With each frame consuming 1538 bytes of bandwidth, that gives us 81274 frames/second (125000000 / 1538). If each frame carries a maximum of 1460 bytes of user data, we can transfer 118660598 data bytes per second (125000000 x 1460 / 1538, using the unrounded frame rate), i.e. around 118 MB/s.

This means that with the default Ethernet frame size of 1518 bytes (MTU = 1500) we have an efficiency of around 95% (118660598 / 125000000 = 0.949), meaning that the other 5% is used by the protocols at the various layers, which we could call overhead.
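The throughput and efficiency figures can be reproduced with a small helper. This is a sketch under the same assumptions as the text (IPv4 and TCP without options, full-size frames sent back to back); `tcp_payload_rate` is a name invented for this example.

```python
def tcp_payload_rate(link_bits_per_second, mtu):
    """Return (payload bytes per second, efficiency) for back-to-back full frames."""
    link_bytes_per_second = link_bits_per_second / 8
    # Ethernet header (14) + FCS (4) + preamble (8) + interframe gap (12)
    wire_bytes_per_frame = mtu + 14 + 4 + 8 + 12
    # IPv4 header (20) + TCP header (20), assuming no options
    mss = mtu - 20 - 20
    efficiency = mss / wire_bytes_per_frame
    return link_bytes_per_second * efficiency, efficiency


rate, efficiency = tcp_payload_rate(1_000_000_000, 1500)
print(f"{rate:,.0f} bytes/s, {efficiency:.1%}")   # 118,660,598 bytes/s, 94.9%
```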

If we enable so-called Jumbo Frames on all equipment, we could potentially increase the share of the bandwidth that is used for our data. Let us look at that.

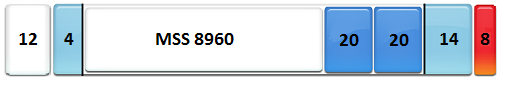

A commonly used MTU value for Jumbo Frames is 9000. First we have to add the overhead for Ethernet (14+4 bytes), the Preamble (8 bytes) and the Interframe Gap (12 bytes). This makes each frame consume 9038 bytes of bandwidth, and out of the 125000000 bytes available each second we get a total of 13830 jumbo frames (125000000 / 9038). That is far fewer frames than the 81000 normal sized frames, but we can carry more data inside each frame and thereby reduce the network overhead.

(There are also other types of overhead, like CPU time in hosts and the work done at network interface cards, switches and routers, but in this article we will only look at the bandwidth usage.)

If we remove the overhead for the Interframe Gap, Ethernet CRC, TCP, IP, Ethernet header and the Preamble we end up with 8960 bytes of data inside each TCP segment. This means that the Maximum Segment Size, the MSS, is 8960 bytes, which is a lot larger than the default 1460 bytes. An MSS of 8960 multiplied by 13830 (the number of frames) gives 123916800 bytes of user data.

This gives us a really great efficiency of 99% (123916800 / 125000000). So by increasing the frame size we would have a little over four percentage points more of the bandwidth available for data than with the default frame size.
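To compare the two frame sizes side by side, the calculation can be wrapped in a function. This is a sketch that counts whole frames per second, as the jumbo frame calculation above does; `summarize` and the constant names are hypothetical. Counting whole frames gives 118660040 bytes/s for MTU 1500, marginally below the 118660598 quoted earlier, which uses the fractional frame rate.

```python
LINK_BYTES_PER_SECOND = 125_000_000
WIRE_OVERHEAD = 14 + 4 + 8 + 12   # Ethernet header, FCS, preamble, interframe gap
IP_TCP_HEADERS = 20 + 20          # assumes IPv4 and TCP without options


def summarize(mtu):
    """Return (frames/s, MSS, payload bytes/s, efficiency) for a given MTU."""
    wire_bytes_per_frame = mtu + WIRE_OVERHEAD
    frames = LINK_BYTES_PER_SECOND // wire_bytes_per_frame   # whole frames only
    mss = mtu - IP_TCP_HEADERS
    payload = frames * mss
    return frames, mss, payload, payload / LINK_BYTES_PER_SECOND


for mtu in (1500, 9000):
    frames, mss, payload, efficiency = summarize(mtu)
    print(f"MTU {mtu}: {frames} frames/s, MSS {mss}, "
          f"{payload} payload bytes/s, {efficiency:.1%}")
```

With these numbers the difference between the two efficiencies comes out at roughly 4.2 percentage points.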

Conclusion: Default Gigabit Ethernet allows an impressive number of frames (about 81000 per second) and a high throughput of actual data (about 118 MB/s). By increasing the MTU to 9000 we could deliver even more data on the same bandwidth, up to 123 MB/s, thanks to the reduced overhead that comes with a lower number of frames. Jumbo Frames could use as much as 99% of the Gigabit Ethernet bandwidth to carry our data.

I believe there is a minor error in the math that you did. Check out the link below, where it shows that you can use a smaller IFG on Gigabit interfaces (8 instead of 12 bytes).

– http://en.wikipedia.org/wiki/Interframe_gap

Hello Christian,

and thank you for your reply. It is an interesting question. Most sources (and I believe the 802.3 standard) say you must wait 96 nanoseconds between each transmitted frame, which for Gigabit Ethernet is the same as 96 bit times, which is 12 bytes of “space”.
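The relation between bit times, nanoseconds and bytes can be sketched as follows. The 96 bit times figure is taken from the reply above; the function name is invented for this example, and the other link speeds are included only to show how the gap duration scales with the bit rate.

```python
IFG_BIT_TIMES = 96                 # interframe gap expressed in bit times
IFG_BYTES = IFG_BIT_TIMES // 8     # 12 bytes of "space" on the wire


def ifg_nanoseconds(link_bits_per_second):
    """Duration of the interframe gap in nanoseconds at a given link speed."""
    return IFG_BIT_TIMES * 1_000_000_000 / link_bits_per_second


for name, speed in (("100 Mbit/s", 100_000_000),
                    ("1 Gbit/s", 1_000_000_000),
                    ("10 Gbit/s", 10_000_000_000)):
    print(f"{name}: {ifg_nanoseconds(speed)} ns")
```

At 1 Gbit/s one bit time is one nanosecond, so 96 bit times and 96 ns are the same thing.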

The information on the Wikipedia link seems to say that you could lower the Interframe Gap time to 64 bit times (8 bytes), but it is quite vague on how this is done in practice and on whether specific network cards and switches are needed for it to work. I shall see if I can find any more information on this.

Regards, Rickard

Top article mate. Loved it.

Can you run this calculation for smallest Ethernet Frame size of 64bytes on a 10G network? My calculations using this formula seem way too low.

Hello Elison,

if you use the minimum frame size and still assume TCP, the throughput will be quite low, probably just as you have calculated.

The full size would be:

8 preamble

14 ethernet header

20 IP

20 TCP

6 payload

4 frame check sequence

12 interframe gap

Which will give 84 bytes consumed for the whole frame but only 6 bytes being payload.

Using the same formula as in the article but with 10G gives 1250000000/84 = 14880952 frames per second (minimum sized). 14880952 frames with 6 bytes of payload each results in 89285714 bytes, or around 89 MB per second, which is actually less than 1 Gbit Ethernet with full size frames!

89285714 / 1250000000 = 0.07, so the efficiency is about 7 percent and the remaining 93 percent is overhead…
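The same calculation as a short Python sketch, using the byte counts listed above. The variable names are made up for this example, and the payload rate uses the unrounded frame rate so that it matches the 89285714 figure.

```python
LINK_BYTES_PER_SECOND = 10_000_000_000 // 8   # 10 Gbit/s = 1 250 000 000 bytes/s

# Byte counts for a minimum-size frame carrying TCP over IPv4 (no options).
frame_parts = {
    "preamble": 8,
    "ethernet header": 14,
    "ip": 20,
    "tcp": 20,
    "payload": 6,
    "frame check sequence": 4,
    "interframe gap": 12,
}

wire_bytes = sum(frame_parts.values())
frames_per_second = LINK_BYTES_PER_SECOND // wire_bytes
efficiency = frame_parts["payload"] / wire_bytes
payload_bytes_per_second = LINK_BYTES_PER_SECOND * efficiency

print(wire_bytes)                      # 84
print(frames_per_second)               # 14880952
print(int(payload_bytes_per_second))   # 89285714
print(f"{efficiency:.1%}")             # 7.1%
```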

Regards, Rickard

Interesting and well written. But it got me thinking… If I had a memcached server and used jumbo frames of 9K, it would mean that it could not serve more than 13830 replies per second (in theory). But a ‘normal’ frame size would offer me 81K potential answers. Since memcached data is often very small, it would be better to have no or smaller jumbo frames, depending on the type of server/data you are running/serving. More bandwidth does not always translate to more replies to clients.

For example, a Counter-Strike or WoW server would get loads of packets, each with a very tiny bit of information. So my conclusion is “bigger isn’t always better – or faster”.

Jumbo frames only set the maximum size possible. If you are sending less than a standard frame size then it does not matter. If you are sending 1.5x as much then you save one frame worth of overhead using jumbo frames.

Actually, if you are sending one single byte MORE than would have fitted into a standard sized frame you will save one “frame worth of overhead”. 🙂
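A small sketch may make the point of the last few comments concrete: the MTU is only an upper limit, so small messages need one frame either way, and jumbo frames only start to save frames once a message no longer fits in a standard frame. The helper `frames_needed` is hypothetical, and it assumes TCP over IPv4 without options (40 bytes of headers per frame).

```python
IP_TCP_HEADERS = 40   # assumption: IPv4 + TCP headers without options


def frames_needed(payload_bytes, mtu):
    """Number of frames needed to carry payload_bytes with a given MTU."""
    mss = mtu - IP_TCP_HEADERS
    # Ceiling division; even an empty message occupies one frame.
    return max(1, -(-payload_bytes // mss))


for size in (100, 1460, 1461, 2190):
    print(f"{size} bytes: {frames_needed(size, 1500)} standard frame(s), "
          f"{frames_needed(size, 9000)} jumbo frame(s)")
```

A 100-byte reply occupies one frame with either MTU, while 1461 bytes needs two standard frames but still only one jumbo frame.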

What about the encoding of the bits on the transmission? I believe this will also lower the overall efficiency, since each “bit” transmitted is not directly related to a “data bit” that you are measuring above.

Thank you for your comment, Fred. Could you expand on what you mean?

As far as I can tell the 1 Gigabit in gigabit ethernet is already corrected for low level encodings like trellis-coding. In other words the 1 gbps is already the product of the 125 MBaud symbolrate multiplied with the 8 bit per symbol. Put in your wording gigabit ethernet actually uses 1.5Gbps “bits” to deliver 1.0Gbps “data bits”.

Gigabit Ethernet uses 8b/10b encoding, so the actual flow for data should be

1000 Mbit / 10 = 100 MByte/s, right? Not 125 MByte/s.

See Sebastian’s answer above. I believe this is already accounted for.
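As a hedged illustration of why the encoding is already accounted for: the figures below are the commonly cited signalling rates, 1.25 Gbaud with 8b/10b coding for the 1000BASE-X variants and 125 Mbaud on four pairs carrying 2 data bits per symbol for 1000BASE-T. In both cases the rate left for data is 1 Gbit/s, which is the number the article starts from. The names are chosen for this sketch only.

```python
def data_rate_8b10b(line_rate_baud):
    """Data bits per second left after 8b/10b coding (8 data bits per 10 line bits)."""
    return line_rate_baud * 8 // 10


# 1000BASE-X: the line runs at 1.25 Gbaud, so 1 Gbit/s remains for data.
base_x_data_rate = data_rate_8b10b(1_250_000_000)

# 1000BASE-T: 125 Mbaud on each of 4 pairs, 2 data bits per symbol per pair.
base_t_data_rate = 125_000_000 * 4 * 2

print(base_x_data_rate)        # 1000000000
print(base_t_data_rate)        # 1000000000
print(base_x_data_rate // 8)   # 125000000 bytes/s
```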

Regards, Rickard

You nailed it, very clear explanation!

Hello,

Maybe you can help. We have written a hardware Ethernet IP module for our Arria V FPGA to be able to send UDP packets to the PC at full rate. We average about 115 Mbyte/sec payload speed over a TByte or so to a PC that is doing nothing else. However, this turns out to be meaningless because the PC stops receiving packets if it goes and does something else, e.g. opens a disk drive or does a compute intensive job. There seems to be no standardized way to throttle packet flow other than the higher level approach used by TCP/IP, but there, sending back ack packets has such high latency that the throughput gets limited to somewhat less than 50 Mbytes/sec. Do you know of a standardized way to implement flow control that every installation could use and that does not impact throughput?

Crystal clear, thank you Rickard !

Hi, just came across this article and had this question. If instead of 1538 bytes, I use 538 bytes (MSS of 500 bytes) in the calculations mentioned, and I get an efficiency very close to that when using a 1538 byte frame. So doesn’t this indicate that a much lower MTU like 500 doesn’t cause much of a difference in performance (throughput, efficiency) when compared to full/normal sized ethernet frame? or am I missing something?? Thank you.

Hello Josh, thanks for your comment.

As each Ethernet frame, used together with IPv4 and TCP, has a total overhead of 78 bytes (preamble, Ethernet header, IPv4 header, TCP header, frame check sequence and interframe gap) that does not change with the total frame size, the efficiency depends mainly on the total size.

With “Jumbo Frames” (total size 9038) the overhead is about 1 % (78/9038).

With ordinary Ethernet (total size 1538) the overhead is about 5 % (78/1538).

With a total size of 538 bytes, as in your example, the overhead per frame would be about 14.5 % (78/538), and the MSS only 460 bytes.
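The three cases can be tabulated with a short sketch. It assumes the fixed 78 bytes of per-frame overhead described above and a 1 Gbit/s link; the function names are hypothetical.

```python
LINK_BYTES_PER_SECOND = 125_000_000
OVERHEAD_BYTES = 78   # fixed per-frame overhead with IPv4 + TCP (no options)


def overhead_share(total_size):
    """Fraction of the bandwidth spent on overhead for a given total frame size."""
    return OVERHEAD_BYTES / total_size


def payload_rate(total_size):
    """Payload bytes per second when frames of total_size are sent back to back."""
    return LINK_BYTES_PER_SECOND * (total_size - OVERHEAD_BYTES) / total_size


for total_size in (9038, 1538, 538):
    print(f"total {total_size}: overhead {overhead_share(total_size):.1%}, "
          f"about {payload_rate(total_size) / 1_000_000:.1f} MB/s of payload")
```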

Best regards, Rickard

Thank you, Rickard. Makes sense now.

So, all else remaining the same, is it always accurate to say that the lower the MTU, the lower the available throughput (more overhead)? In the above example, if the total size was 538 bytes, the throughput would be around 107 MB/sec (125000000 x 460 / 538), I think.

Also, the maximum possible throughput on Gigabit ethernet (if NOT using jumbo frames) will be 118 MB/sec (or 944 Mbps) and can never be more than that, correct?

Other than the overhead calculated on the basis of the frame size, this calculation does not take into account other issues like packet loss, etc. So I assume that in real life these ‘additional’ factors are what will cause the actual throughput to be even lower than the maximum possible of 944 Mbps, am I right?

Thank you.

Hello Josh,

yes, it is correct that the less user data there is inside the frame, the lower the total throughput of user data, because the headers (overhead) always stay the same size. (The limit does not have to be the “MTU”, which is the physical limit; it could also be an application that just sends little data in each packet.)

There would absolutely be other factors in play, such as packet loss as you mention. However, that is more of a “logical” concern regarding the transport of certain logical data, which Ethernet itself does not understand or care about. The consumed bandwidth would remain the same even if half of the packets were lost in transit, so to speak.

Another factor is the CPU overhead that comes from having more packets with less content to process in the data path. Each frame requires a non-trivial amount of work: checksum calculations, sequence number handling, various header fields, lookups in switch MAC forwarding tables, searches in router routing tables and more. From that perspective it is also much more effective to carry as much data as possible inside each frame to reduce this work.