LACP support is available on ESXi 5.1, 5.5 and 6.0. This article shows how to configure and verify the new LACP NIC Teaming option in ESXi. LACP (Link Aggregation Control Protocol) is used to dynamically form Link Aggregation Groups between network devices and ESXi hosts.

With vSphere 5.1 and later we have the possibility of using LACP to form a Link Aggregation team with physical switches, which has some advantages over the ordinary static method used earlier. To combine several NICs into one logical interface, correct configuration is needed both on the vSwitches and on the physical switches. Some common configuration errors did, however, make this kind of setup somewhat risky. With LACP in ESXi 5.1 and later this becomes easier.

In earlier releases of ESXi the virtual switch side must have the NIC Teaming Policy set to “IP Hash”, and on the physical switch the attached ports must be set to a static Link Aggregation group, called “Etherchannel mode on” in Cisco and “Trunk in HP Trunk mode” on HP devices.

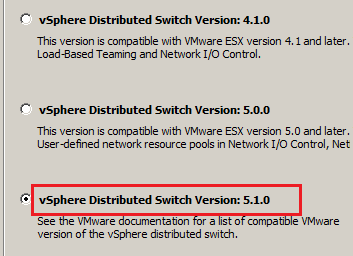

To use a dynamic Link Aggregation setup you must have ESXi 5.1 (or later) together with a virtual Distributed Switch of version 5.1 (or later). This could either be a new Distributed vSwitch or an already existing 4.0, 4.1 or 5.0 switch that is upgraded to version 5.1.

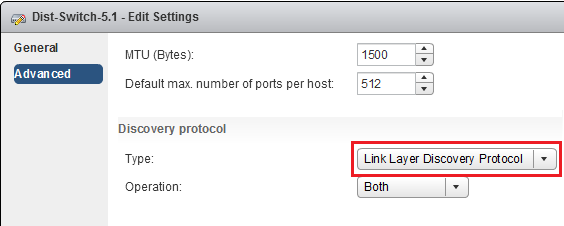

Even if it is not needed technically for LACP, it is always good to enable LLDP to make the configuration on the physical switch simpler. Above is the new configuration box on the Web Client of vSphere 5.1.

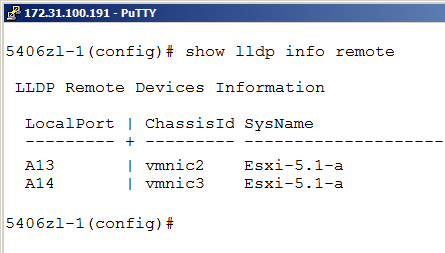

Now it is easy to verify the exact ports on the physical switch attached to the specific ESXi host. Above we can see that vmnic2 and vmnic3 from the ESXi host are connected to certain physical switch ports. This information is needed soon, but we must first set up the LACP connection on the ESXi host.

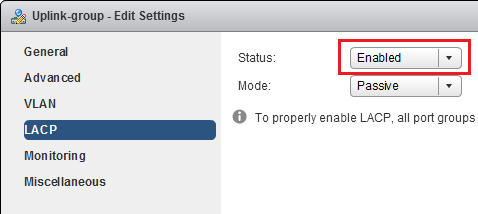

In the Uplink portgroup settings we have the new option of enabling LACP. Note that you must use the Web Client to reach this setting. Change from the default Disabled to Enabled to activate LACP. We can now select either the default “passive” mode or “active” mode.

Passive means in this context that the ESXi vmnics remain silent and do not send any LACP frames (LACPDUs) unless something on the other side initiates the LACP session. This means that the physical switch must start the LACP negotiation.

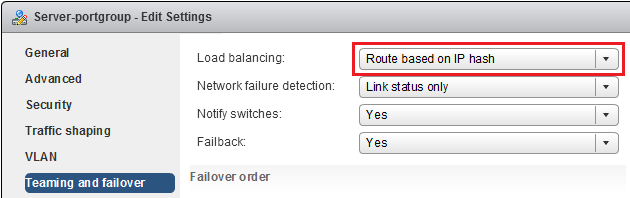

All other portgroups on the Distributed vSwitch must be set to “IP Hash”, just as in earlier releases. The LACP setting is only configured on the Uplink portgroup.

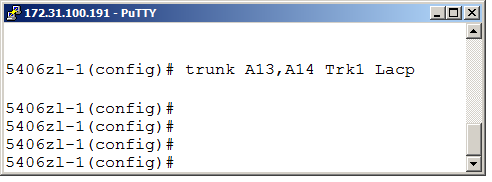

We must now complete the setup on the physical switch. In this example we have an HP switch (Procurve 5406-zl), and the commands shown here will work on all HP switches (excluding the A-series). As displayed earlier, we can use the LLDP feature to find the actual switch ports connected to the ESXi host. In this example the ports are called A13 and A14.

In the HP command line interface a collection of switch ports in a Link Aggregation Group is called a “Trunk” (“Port Channel” or “Etherchannel” on Cisco devices). The command above creates a logical port named Trk1 from the physical ports A13 and A14 using LACP.

The default mode on HP LACP is active, which means that the switch will now start negotiating LACP with the other side, here our ESXi 5.1 host.

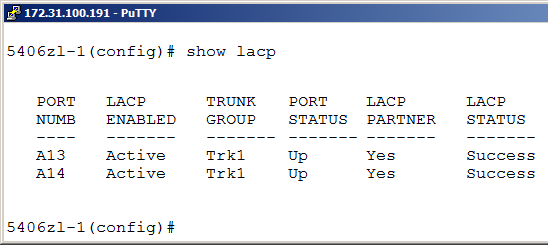

Now we get to the real advantage of the dynamic LACP protocol: we can verify the result. With an ordinary static trunk/etherchannel we cannot really know if the links are correctly configured and the cables properly patched, which is often responsible for a number of network issues. With LACP, however, we can see whether the setup of the link aggregation group was completed or not.

With the command “show lacp” we can see that the physical switch has successfully found LACP partners on both interfaces and that the link is now up and running in good order. This is the great feature of LACP: links will not come up as part of the aggregation group if the other side is not an LACP partner.

This prevents lots of potential network disturbances and makes it much easier to verify the link aggregation configuration. It should be noted that LACP, despite popular belief, does not make the network load distribution any different or more optimized. Chris Wahl has a good writeup demystifying many LACP myths.

The main benefit of LACP is to avoid misconfigured and non-matching switch-to-switch settings. LACP is as such a welcome new feature in ESXi 5.1, although it is only available on Distributed vSwitches.

Any idea if we are going to see active load balancing done now that LACP is supported? Thanks for the great articles.

Hello Ryan and thank you for your comment.

The new LACP option will not change the way the actual network load balancing is done, as LACP is only responsible for the link aggregation setup. How the load distribution is done depends on the two switch sides, which can actually use different methods! The VMware virtual switch will continue to load balance outgoing frames based on a hash of the source and destination IP addresses.

However, the physical switch could use something else, perhaps based on MAC addresses, on IP addresses like the vSwitch, or even on TCP/UDP port numbers. This will depend on the specific switch type and in some cases the configuration.
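To make the IP Hash idea a bit more concrete, here is a minimal Python sketch of how an uplink could be picked from a source and destination IP address. It assumes a simplified model where the two IPv4 addresses are XORed and the result is taken modulo the number of uplinks. The function name `ip_hash_uplink` is made up for this illustration, and the real vSwitch implementation may differ in detail.

```python
import ipaddress


def ip_hash_uplink(src_ip: str, dst_ip: str, uplink_count: int) -> int:
    """Return the index of the uplink chosen for one source/destination pair.

    Simplified model (an assumption, not VMware code): XOR the two IPv4
    addresses as 32-bit integers and take the result modulo the uplink count.
    """
    if uplink_count < 1:
        raise ValueError("uplink_count must be at least 1")
    src = int(ipaddress.IPv4Address(src_ip))
    dst = int(ipaddress.IPv4Address(dst_ip))
    return (src ^ dst) % uplink_count


if __name__ == "__main__":
    # The same address pair always maps to the same uplink,
    # while a different destination may land on another uplink.
    for dst in ("10.0.0.20", "10.0.0.21"):
        print(dst, "-> uplink", ip_hash_uplink("10.0.0.10", dst, 2))
```

Note that nothing in this calculation involves LACP: the choice is made per frame from the addresses alone, which is why enabling LACP does not change the load distribution.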

Hi Rickard,

Any ideas on how many LACP channels are allowed on a vDS in 5.1? I can’t really find any documentation on this anywhere.

Any hints would be much appreciated.

You could have 1 channel per dvSwitch and a maximum of 4 active ports.

That should be per ESXi host, but the Distributed vSwitch could span many hosts.

Hello Michael,

as the practical effect will be that each ESXi host sets up a separate LACP connection with the physical switch, I believe (but have not seen this confirmed) that the maximum number is 350, which is the number of hosts allowed on a single virtual Distributed vSwitch.

Hi, do you happen to know whether LACP is compatible with the load-balancing policy “Route based on physical NIC load” (i.e. Load-based Teaming)?

Thanks,

-Loren

Hello Loren,

no, LACP cannot be used with either the default Port ID policy or Load-based Teaming. Both the physical and the virtual switch using LACP must allow all MAC addresses to be sent over all interfaces simultaneously, and the only vSphere NIC Teaming Policy that is compatible with this is IP Hash.

Regards, Rickard

I am very disappointed that the option to control LACP is not available in the vSphere Client. The Web Client is still considerably behind in some areas and lacking major sections of configuration UI, yet now features are being added to it that are not available in the normal vSphere Client? Insane!

My understanding is that no new features in vSphere 5.1 will be configurable through the vSphere Client, but only the Web Client. As the vSphere Client has been a popular tool for many years it might be a bit too quick to now force customers into the Web Client.

Hello,

I am using a Dlink DGS-1100-16 switch.

I have problems connecting 2 ESXi hosts and 1 storage device (QNAP) with link aggregation (one device always has time-outs).

I can only configure trunked ports on this switch and have no option to choose between static and dynamic trunking.

Is my switch the cause of the time-outs or could it be another problem?

Thx

Hello!

One possible cause is the word “trunk”, which could mean either Link Aggregation or VLAN (802.1Q) tagging, depending on the switch vendor.

If you are sure that this specific DLink device uses the word “trunk” for static Link Aggregation with no LACP then it should work against the ESXi vSwitches, as long as you have configured the NIC Teaming Policy for the vSwitch to “IP Hash”.

Do you use ESXi 5.0 or 5.1 and standard vSwitches or Distributed?

Regards, Rickard

Link Aggregation (Standards based world)

Etherchannel (Cisco World)

Protocols Used

LACP (Inter Switch Vendor and Servers)

PAgP (Cisco Proprietary)

TRUNK

A link on which multiple VLANs are allowed due to the ability to differentiate traffic by tagging

ISL (Cisco Proprietary)

802.1q (IEEE Standard for VLAN Tagging)

There is also 802.1ad (AKA QinQ)

A TRUNK can also be an aggregation of links or Etherchannel.

I could be wrong, but can’t you use LBT or MAC Hash WITHOUT any form of switch-based teaming?

That would avoid the misconfig as a problem. All you need is standard dot1q +IF+ you need multiple VLANs.

This is proven because plenty of people use these teaming settings with NICs on 2 separate switches without vPC/IRF/etc to form a single virtual switch.

Hello Dan,

thanks for your comment. You are certainly correct that you could use Port ID, Src MAC or LBT without any teaming on the switch side. In most cases this would be good enough for both basic load distribution and fault tolerance.

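The advantage of IP Hash, with or without LACP, is that a single VM could get the total bandwidth of all network cards in the team when it communicates with several different IP addresses, which is not possible with the other NIC teaming policies. Each individual source/destination pair will still use only one uplink.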
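As a small illustration of this point, the sketch below counts which uplinks one VM would use for a given set of destinations. It assumes a simplified hash (XOR of the two IPv4 addresses modulo the number of uplinks), and the function name `uplinks_used` as well as the addresses are made up for the example.

```python
import ipaddress


def uplinks_used(vm_ip: str, destinations: list[str], uplink_count: int) -> set[int]:
    """Return the set of uplink indexes one VM uses for the given destinations.

    Assumed, simplified hash: XOR of source and destination IPv4 address,
    modulo the number of uplinks in the team.
    """
    vm = int(ipaddress.IPv4Address(vm_ip))
    return {
        (vm ^ int(ipaddress.IPv4Address(dst))) % uplink_count
        for dst in destinations
    }


if __name__ == "__main__":
    # One destination: the VM is pinned to a single uplink.
    print(uplinks_used("10.0.0.10", ["10.0.0.20"], 4))
    # Several destinations: the VM's traffic can spread over more uplinks.
    print(uplinks_used("10.0.0.10",
                       ["10.0.0.20", "10.0.0.21", "10.0.0.22", "10.0.0.23"], 4))
```

With a single destination the VM never exceeds the bandwidth of one pNIC, even with IP Hash. Only with several destinations can the traffic spread over the team.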

Cisco recommends setting both sides to active/active vs. active/passive. Any thoughts on this setup vs. active/passive?

Steele

From my point of view both ways should work equally well. By setting the ESXi side to passive we could be sure that no negotiation will start until the physical switch is ready, but there is nothing wrong at all with both sides set to active.

As long as we do not have a passive – passive setup it will work fine.
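The rule for the two modes is simple enough to write down as a tiny Python check. This is only a sketch of the rule as described here (at least one side must be active), with a made-up function name `lacp_session_forms`. It does not model timers or any other part of the protocol.

```python
VALID_MODES = {"active", "passive"}


def lacp_session_forms(esxi_mode: str, switch_mode: str) -> bool:
    """Return True if an LACP negotiation would start between the two sides.

    A passive side only answers, so at least one side has to be active.
    """
    for mode in (esxi_mode, switch_mode):
        if mode not in VALID_MODES:
            raise ValueError(f"unknown LACP mode: {mode!r}")
    return "active" in (esxi_mode, switch_mode)


if __name__ == "__main__":
    for esxi in ("active", "passive"):
        for switch in ("active", "passive"):
            print(f"ESXi {esxi:7} / switch {switch:7} ->",
                  lacp_session_forms(esxi, switch))
```

Three of the four combinations work. Only passive on both sides leaves the link aggregation group without any negotiation.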

Hi Rickard, Thanks for the article.

I have one question about DVS 5.1.

Which policy do you recommend when a virtual machine will use at most 1 Gbit/s of a pNIC: IP Hash with LACP or Etherchannel, or Route based on physical NIC load (LBT)?

I ask because LBT monitors the NIC load in its algorithm and can move traffic to another interface when utilization reaches about 70%, and I don’t think IP Hash has any equivalent monitoring.

Thanks for your attention.

Regards,

Eder Rodrigues

Hello Eder, and thank you for your question.

To me the only reason to consider using IP Hash, with or without LACP, is when you have virtual machines that need to consume more bandwidth than a single pNIC can give them.

If I understand your situation correctly and this is not the case, I would recommend using Load Based Teaming. The LBT policy will, as you say, make sure that the VMs are somewhat fairly spread over the available pNICs and could be “moved” if needed. With LBT a single VM can never get more bandwidth than a single pNIC provides, but the configuration on the physical switches is simpler, i.e. no Link Aggregation / Etherchannel setup is needed.